The promise of Artificial Intelligence on edge devices is transformative. From intelligent sensors in smart factories predicting machinery failure to autonomous drones navigating complex environments, edge AI brings real-time insights and unparalleled responsiveness. However, this powerful paradigm isn’t without its Achilles’ heel: the phenomenon of AI hallucination. For embedded engineers, understanding, detecting, and preventing these “model failures” is paramount to building truly robust and reliable edge AI systems.

The Phantom Menace: What are AI Hallucinations on the Edge?

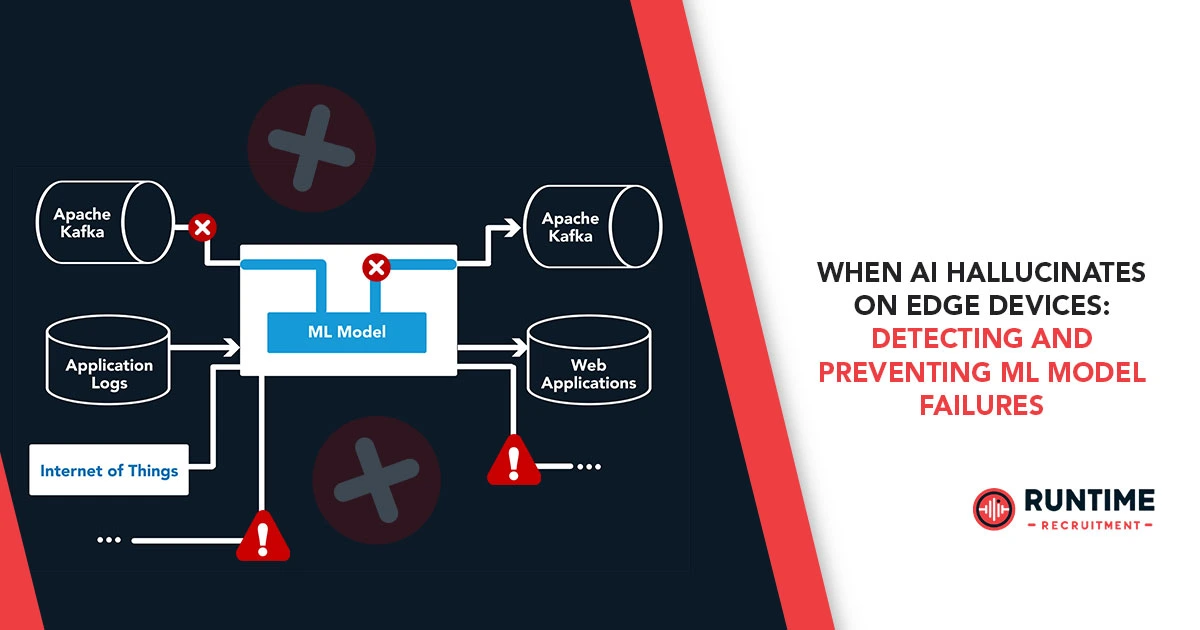

In the context of Machine Learning (ML), a “hallucination” refers to an AI model generating outputs that appear plausible but are factually incorrect or nonsensical, or that deviate significantly from the expected, real-world ground truth. While often discussed in the realm of Large Language Models (LLMs) producing convincing but fabricated text, hallucinations are not limited to generative AI. On edge devices, where models are often compact and operate under strict resource constraints, hallucinations can manifest in various, often subtle, ways:

- Misclassification of objects: A vision system on an autonomous vehicle might misidentify a pedestrian as a lamppost, with potentially catastrophic consequences.

- Anomalous sensor readings: A predictive maintenance system might report a critical anomaly that doesn’t exist, leading to unnecessary shutdowns.

- Incorrect control signals: A model driving an industrial robot might issue a faulty command, causing the robot to perform an unintended action.

- Generating non-existent data: A diagnostic tool might produce reports with fabricated statistics or phantom errors.

The inherent challenge on edge devices is that these hallucinations can occur in real-time, often without immediate human oversight, making their detection and mitigation critical. Unlike cloud-based AI where models can be massive and benefit from extensive post-processing and human-in-the-loop validation, edge AI often demands instant, autonomous decision-making.

Why Do Edge AI Models Hallucinate? The Constraints-Driven Reality

The very conditions that make edge AI attractive – low latency, reduced bandwidth, enhanced privacy – also contribute to its vulnerability to hallucinations. The unique constraints of embedded systems create fertile ground for ML model failures:

- Limited Resources: Edge devices are typically constrained in processing power, memory, and storage. This necessitates highly optimized, compact models (e.g., quantized models, pruned networks) that may have less capacity to generalize or retain nuanced information than their larger cloud counterparts (Wevolver, 2025). With less capacity, a model can lean more heavily on coarse patterns learned from its training data, which makes it more susceptible to out-of-distribution inputs.

- Data Quality and Variability: Edge devices operate in dynamic, real-world environments. The data they encounter can be noisy, incomplete, corrupted by sensor malfunctions, or simply vary significantly from the data used during training (XenonStack, 2025). If the training data doesn’t adequately represent the diverse conditions an edge device will face, the model might produce erroneous outputs when confronted with novel or slightly different inputs. This includes:

  - Insufficient or Biased Training Data: Models trained on limited or unrepresentative datasets are prone to making skewed or incorrect predictions when encountering real-world variability (ServerMania, 2025).

  - Outdated Information: If a model is trained on old data and deployed in an environment that has changed, it may generate outputs that are no longer accurate (GPT-trainer, 2025).

  - Environmental Factors: Temperature fluctuations, humidity, dust, vibrations, and electromagnetic interference can all affect sensor readings and hardware performance, leading to corrupted input data for the ML model (XenonStack, 2025). A model trained on clean, ideal data might “hallucinate” when confronted with inputs from a compromised sensor.

- Overfitting and Generalization Issues: In an effort to achieve high accuracy on limited training data, models can sometimes overfit, essentially memorizing the training examples rather than learning generalizable patterns (DigitalOcean, 2024). When deployed on an edge device and presented with unseen data, such a model can easily produce wildly inaccurate outputs.

- Intermittent Connectivity: Many edge devices operate with unreliable or intermittent network connections. While this is often a motivation for edge AI (to reduce reliance on the cloud), it also means that models cannot count on frequent updates or external validation from cloud services, increasing the risk that “drift” goes unnoticed and errors accumulate over time (Google Cloud Blog, n.d.).

- Adversarial Attacks: Though not always classified as “hallucinations,” adversarial attacks are a deliberate form of input perturbation designed to trick ML models into misbehaving. On edge devices, where computational resources for robust defense mechanisms might be limited, these attacks can cause models to “hallucinate” malicious or incorrect interpretations of data.
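The adversarial case is easiest to see on a linear model. The sketch below applies the Fast Gradient Sign Method (FGSM), one well-known attack, to a hypothetical four-feature linear classifier; the weights, bias, input, and epsilon are made-up values, not taken from any real deployment. Because the gradient of the score w·x + b with respect to x is simply w, FGSM nudges every feature by epsilon along sign(w), which is the largest score change achievable when each feature may move by at most epsilon.

```python
def score(w, b, x):
    """Linear decision score: positive means class 1, negative class 0."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_linear(w, x, epsilon, push_positive=True):
    """FGSM step for a linear model. The gradient of w.x + b with respect
    to x is w, so each feature moves by epsilon along +/- sign(w)."""
    direction = 1 if push_positive else -1
    return [xi + direction * epsilon * sign(wi) for wi, xi in zip(w, x)]


# Hypothetical model and input (illustrative values only).
w = [0.5, -0.3, 0.8, 0.1]
b = -0.2
x = [0.2, 0.4, 0.1, 0.3]

clean = score(w, b, x)                      # negative: class 0
x_adv = fgsm_linear(w, x, epsilon=0.1)      # every feature moves by only 0.1
adversarial = score(w, b, x_adv)            # positive: class 1
```

Here a change of 0.1 per feature shifts the score by 0.1 × Σ|w| = 0.17, enough to cross the decision boundary. Deep networks are not linear, but FGSM applies the same sign-of-gradient step to their input gradients.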

Detecting the Delusions: Strategies for Identifying Model Failures

Detecting AI hallucinations on edge devices requires a multi-faceted approach, integrating techniques at various stages of the development and deployment lifecycle:

- Anomaly Detection on Outputs:

  - Statistical Outlier Detection: Monitor model outputs for values that fall outside expected statistical distributions. This can involve simple thresholding, Z-scores, or more advanced statistical process control techniques.

  - Rule-Based Validation: Implement domain-specific rules to check the plausibility of model outputs. For example, if a vision model classifies an object as “cat” but its bounding box is the size of a building, a rule could flag it as an anomaly.

  - Consistency Checks: For sequential tasks (e.g., video analysis), check for temporal consistency in model predictions. Sudden, inexplicable changes in classification or regression outputs could indicate a hallucination.
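Two of these output checks can be sketched in a few lines. `StreamingZScore` implements Z-score outlier detection with Welford's online mean/variance update, so it needs constant memory, and `LabelConsistencyFilter` is one simple form of temporal consistency check that ignores a new label until it persists for `k` frames. Both class names, the thresholds, and the synthetic data are illustrative assumptions, not a reference implementation.

```python
import math


class StreamingZScore:
    """Flags outputs far from the running mean, using Welford's online
    mean/variance update so memory use stays constant."""

    def __init__(self, threshold=3.0, warmup=30):
        self.threshold = threshold        # |z| above this is an outlier
        self.warmup = max(warmup, 2)      # need >= 2 samples for a variance
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0                     # running sum of squared deviations

    def update(self, x):
        """Return True if x is an outlier; outliers are not added to the stats."""
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            if std > 0 and abs(x - self.mean) / std > self.threshold:
                return True
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return False


class LabelConsistencyFilter:
    """Temporal consistency check: a new class label replaces the stable
    one only after it persists for k consecutive frames."""

    def __init__(self, k=3):
        self.k = k
        self.stable = None      # last label that passed the persistence test
        self.candidate = None   # label currently trying to replace it
        self.count = 0

    def update(self, label):
        if label == self.stable:
            self.candidate, self.count = None, 0
        elif label == self.candidate:
            self.count += 1
        else:
            self.candidate, self.count = label, 1
        if self.candidate is not None and self.count >= self.k:
            self.stable, self.candidate, self.count = self.candidate, None, 0
        return self.stable


# Synthetic data: a steady output followed by one wild value.
z = StreamingZScore(threshold=3.0, warmup=30)
readings = [20.0 + 0.1 * (i % 5) for i in range(50)] + [35.0]
flags = [z.update(r) for r in readings]

f = LabelConsistencyFilter(k=3)
labels = [f.update(lab) for lab in ["car", "car", "car", "cat", "car", "car"]]
# The single-frame "cat" never becomes the stable label.
```

Flagged outliers are deliberately kept out of the running statistics so that a single hallucinated output cannot inflate the baseline. The trade-off is that a genuine, persistent shift keeps being flagged, which arguably is the right behavior, since it points to drift or a sensor fault worth investigating.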

- Input Data Validation:

  - Sensor Data Health Monitoring: Continuously monitor sensor health, calibration, and noise levels. Abrupt changes in sensor output characteristics can indicate a hardware issue leading to corrupted input, which in turn can cause model hallucination.

  - Data Pre-processing Filters: Implement robust data validation and cleaning steps at the edge. Filter out-of-range values, detect sudden spikes, or utilize simple ML models to pre-classify potentially corrupted inputs before they reach the main inference model.

  - Novelty Detection: Employ lightweight novelty or outlier detection algorithms to identify inputs that are significantly different from the training distribution. When such an input is detected, the model’s output should be treated with caution, or a human-in-the-loop intervention could be triggered.
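The input-side checks can be sketched the same way. `validate_sample` combines an out-of-range filter with a rate-of-change (spike) test, and `novelty_score` is a deliberately lightweight novelty detector: a Mahalanobis distance that assumes independent features (diagonal covariance) and uses per-feature means and standard deviations from the training set. The sensor limits, training statistics, and threshold are hypothetical placeholders.

```python
import math

# Hypothetical limits for a temperature channel; real values would come
# from the sensor datasheet and the physics of the installation.
RANGE_LO, RANGE_HI = -40.0, 125.0   # deg C
MAX_STEP = 5.0                      # largest credible change per sample


def validate_sample(prev, x, lo=RANGE_LO, hi=RANGE_HI, max_step=MAX_STEP):
    """Return a rejection reason for a raw reading, or None if it passes."""
    if math.isnan(x) or not (lo <= x <= hi):
        return "out_of_range"
    if prev is not None and abs(x - prev) > max_step:
        return "spike"
    return None


def novelty_score(features, train_mean, train_std):
    """Mahalanobis distance under a diagonal-covariance assumption:
    sqrt(sum(((f - mean) / std) ** 2)) against training-set statistics."""
    return math.sqrt(sum(((f - m) / s) ** 2
                         for f, m, s in zip(features, train_mean, train_std)))


# Hypothetical per-feature training statistics.
train_mean = [0.0, 1.0, 50.0]
train_std = [1.0, 0.5, 10.0]
NOVELTY_THRESHOLD = 3.0  # would be tuned on held-out in-distribution data

in_dist = novelty_score([0.5, 1.2, 55.0], train_mean, train_std)  # about 0.81
ood = novelty_score([4.0, 3.0, 120.0], train_mean, train_std)     # 9.0
```

The diagonal assumption keeps the cost to a few operations per feature; if features are strongly correlated, a full covariance matrix (inverted offline and stored on the device) gives a more faithful distance at higher cost.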

- Model Performance Monitoring (Post-Deployment):

  - Drift Detection: Monitor for “model drift,” where the model’s performance degrades over time due to changes in the operating environment or input data distribution. This can be concept drift (the relationship between inputs and outputs changes) or data drift, also called covariate shift (the distribution of the input data itself changes).

  - Confidence Scores: Many ML models provide a confidence score for their predictions. Low confidence scores, especially in critical applications, can serve as an early warning sign of potential hallucinations or uncertain outputs. High scores deserve caution too: raw softmax probabilities are often poorly calibrated and can remain high on out-of-distribution inputs.

  - Shadow Deployment and A/B Testing: In less critical applications, a “shadow” model (for example, an older, known-good version) can run in parallel with the deployed model, and the two sets of outputs can be compared to identify discrepancies.
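For post-deployment monitoring, here is a sketch under simple assumptions. Drift is measured with the Population Stability Index (PSI), one common drift metric, by comparing a binned reference window from training with a binned live window, and confidence gating thresholds the top softmax probability. The bin edges, the synthetic data, and `min_conf=0.8` are illustrative choices; a frequently quoted rule of thumb treats PSI above about 0.25 as a significant shift, but any threshold should be validated per application.

```python
import math


def histogram(values, edges):
    """Fraction of values per bin; values outside the edges are clamped
    into the first or last bin."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        i = 0
        while i < len(counts) - 1 and v >= edges[i + 1]:
            i += 1
        counts[i] += 1
    return [c / len(values) for c in counts]


def psi(expected, actual, eps=1e-6):
    """Population Stability Index between two binned distributions.
    Empty bins are floored at eps to keep the log finite."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        total += (a - e) * math.log(a / e)
    return total


def softmax(logits):
    m = max(logits)                      # subtract max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def gated_prediction(logits, min_conf=0.8):
    """Return (class_index, confidence, trusted) for one prediction."""
    probs = softmax(logits)
    k = max(range(len(probs)), key=probs.__getitem__)
    return k, probs[k], probs[k] >= min_conf


# Synthetic data: training reference window vs. a live window shifted upward.
edges = [0.0, 1.0, 2.0, 3.0, 4.0]
reference = histogram([0.5] * 25 + [1.5] * 25 + [2.5] * 25 + [3.5] * 25, edges)
live = histogram([2.5] * 50 + [3.5] * 50, edges)
drift = psi(reference, live)

confident = gated_prediction([4.0, 0.5, 0.2])
uncertain = gated_prediction([1.0, 0.9, 0.8])
```

Because softmax confidence can stay high on out-of-distribution inputs, a gate like this is best combined with an input-side novelty check rather than relied on alone.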

- Explainability and Interpretability (XAI) at the Edge: While often resource-intensive, efforts are being made to bring XAI techniques to the edge. Understanding why a model made a particular prediction can help identify when it’s “thinking” incorrectly. This might involve:

  - Feature Importance Analysis: Identify which input features most influenced a particular decision. If the model is relying on irrelevant or unexpected features, it could indicate a hallucination.

  - Simplified Surrogate Models: Use smaller, interpretable models to approximate the behavior of the main model for specific predictions, providing a more transparent view of its decision-making process.
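Full XAI toolkits are often too heavy for an edge target, but a crude feature-importance check is cheap. The sketch below uses one-at-a-time ablation (also called occlusion): each feature is replaced by a baseline value and the change in output is recorded. `toy_model`, the all-zero baseline, and the `allowed` feature set are hypothetical; on a real device the model call would be the deployed inference function, at a cost of one extra inference per feature.

```python
def ablation_importance(model, x, baseline):
    """One-at-a-time ablation (occlusion): swap each feature for its
    baseline value and record the absolute change in model output."""
    ref = model(x)
    scores = []
    for i in range(len(x)):
        x_ablated = list(x)
        x_ablated[i] = baseline[i]
        scores.append(abs(ref - model(x_ablated)))
    return scores


def relies_on_unexpected(scores, allowed, top_k=1):
    """Flag the decision if any of the top_k most influential features
    is outside the set a domain expert considers relevant."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return any(i not in allowed for i in ranked[:top_k])


# Hypothetical toy model: features 0 and 2 are meant to matter, while
# feature 1 (say, a board-temperature channel) should not drive decisions.
def toy_model(x):
    return 0.7 * x[0] + 0.2 * x[1] ** 2 + 0.1 * x[2]


scores = ablation_importance(toy_model, [1.0, 2.0, 3.0], baseline=[0.0, 0.0, 0.0])
suspicious = relies_on_unexpected(scores, allowed={0, 2})   # feature 1 dominates
```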

Preventing the Phantoms: Building Robust Edge AI Systems

Prevention is always better than cure. Embedding robustness from the ground up is essential for mitigating AI hallucinations on edge devices:

- High-Quality, Diverse, and Representative Training Data: This is the bedrock of reliable AI.

  - Data Augmentation: Systematically generate variations of existing data (e.g., changing lighting, adding noise, slight rotations for images) to expose the model to a wider range of scenarios it might encounter in the real world.

  - Edge Case Collection: Actively collect and incorporate data from challenging edge cases that are likely to cause model confusion.

  - Regular Data Refresh: For dynamic environments, periodically update training datasets to reflect new real-world conditions (ServerMania, 2025).
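As a sketch of data augmentation for a time-series sensor rather than images, `augment_window` applies three common perturbations to a 1-D window: a random gain (loosely analogous to a lighting change), a small time shift, and additive Gaussian noise. The function name, parameter ranges, and sample waveform are assumptions for illustration; suitable ranges depend on the sensor and its operating conditions.

```python
import random


def augment_window(window, rng, noise_std=0.05, gain_range=(0.9, 1.1), max_shift=2):
    """Return an augmented copy of a 1-D sensor window.

    gain jitter    -> amplitude / calibration changes
    time shift     -> misaligned sampling windows (edge samples repeated)
    gaussian noise -> electrical noise
    """
    gain = rng.uniform(*gain_range)
    shift = rng.randint(-max_shift, max_shift)
    n = len(window)
    out = []
    for i in range(n):
        j = min(max(i - shift, 0), n - 1)   # clamp index: pad with edge values
        out.append(window[j] * gain + rng.gauss(0.0, noise_std))
    return out


clean = [0.0, 0.5, 1.0, 0.5, 0.0, -0.5, -1.0, -0.5]
rng = random.Random(42)                 # fixed seed for reproducible datasets
augmented = [augment_window(clean, rng) for _ in range(4)]
```

Passing an explicit `random.Random` instance rather than using the module-level functions keeps augmented datasets reproducible, which helps when comparing training runs.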

- Robust Model Architectures and Optimization:

  - Quantization-Aware Training: When quantizing models for edge deployment (e.g., from FP32 to INT8), use techniques that minimize accuracy loss during the conversion process (Wevolver, 2025). Quantization-aware training does this by simulating low-precision arithmetic during training, so the model learns weights that tolerate the rounding error it will see after conversion.

  - Pruning and Knowledge Distillation: Optimize model size and complexity without significantly sacrificing performance by removing redundant connections or transferring knowledge from a larger “teacher” model to a smaller “student” model.

  - Uncertainty Quantification: Train models that can estimate their own uncertainty (for example, with deep ensembles or Monte Carlo dropout), allowing the system to flag predictions where the model is less confident.
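The core operation behind quantization-aware training is fake quantization: values are quantized to INT8 and immediately dequantized, so the network trains against the rounding and clamping error it will see after conversion. The sketch below shows affine INT8 quantization for one tensor range in plain Python; frameworks such as TensorFlow and PyTorch ship their own QAT tooling, and nothing here mirrors their APIs. The weight values are made up.

```python
def quant_params(x_min, x_max, qmin=-128, qmax=127):
    """Affine (asymmetric) INT8 parameters for a tensor range."""
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)   # keep 0.0 exactly representable
    scale = (x_max - x_min) / (qmax - qmin) or 1.0    # avoid /0 for an all-zero tensor
    zero_point = qmin - round(x_min / scale)
    return scale, zero_point


def fake_quant(x, scale, zero_point, qmin=-128, qmax=127):
    """Quantize to INT8, clamp, then dequantize back to float.

    QAT inserts this in the forward pass so training sees the rounding
    error; the backward pass usually treats it as identity (the
    straight-through estimator), which this forward-only sketch omits.
    """
    q = max(qmin, min(qmax, round(x / scale) + zero_point))
    return (q - zero_point) * scale


weights = [-0.62, -0.1, 0.0, 0.33, 0.91]          # hypothetical layer weights
scale, zp = quant_params(min(weights), max(weights))
errors = [abs(w - fake_quant(w, scale, zp)) for w in weights]
```

For values inside the tensor's range, the round-trip error is at most about half a quantization step (`scale / 2`); clamping makes the error larger for values outside it, which is one reason the range observed during calibration matters.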

- Hardware-Software Co-design and Environmental Resilience:

  - Ruggedized Hardware: Utilize sensors and processing units designed to withstand harsh environmental conditions, minimizing hardware-induced data corruption.

  - Hardware Acceleration: Leverage specialized hardware (e.g., NPUs, DSPs, FPGAs) to efficiently run complex models, potentially allowing for less aggressive quantization and better generalization.

  - Redundancy and Fault Tolerance: Implement redundant sensors or processing units where possible. If one component fails, the system can switch to a backup or use data from other sources (XenonStack, 2025).
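Redundancy pays off most when paired with a voting rule. A minimal sketch, assuming three redundant sensors measuring the same quantity: the hypothetical `vote` function takes the median of the readings that arrived and reports any sensor that is missing or far from the median, using an assumed `max_dev` tolerance.

```python
import statistics


def vote(readings, max_dev):
    """Median voting over redundant sensors (e.g., triple modular redundancy).

    readings: one value per sensor, or None for a sensor that did not report.
    Returns (voted_value, suspect_indices). A sensor is suspect if it is
    missing or deviates from the median by more than max_dev.
    """
    valid = [r for r in readings if r is not None]
    if not valid:
        raise ValueError("no redundant sensor produced a reading")
    value = statistics.median(valid)
    suspects = [i for i, r in enumerate(readings)
                if r is None or abs(r - value) > max_dev]
    return value, suspects


v1, bad1 = vote([20.1, 20.3, 87.0], max_dev=1.0)   # one sensor wildly off
v2, bad2 = vote([20.1, None, 20.5], max_dev=1.0)   # one sensor silent
```

The median tolerates one faulty sensor out of three. With only two valid readings that disagree, the median is simply their mean and cannot tell which sensor is wrong, which is why three sensors is the usual minimum for this kind of voting.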

- Hybrid Edge-Cloud Architectures:

  - Hierarchical Inference: Perform initial, lightweight inference on the edge. If the confidence is low or an anomaly is detected, offload the data or a subset of it to a more powerful cloud-based model for re-evaluation.

  - Federated Learning: Train models collaboratively across multiple edge devices without sharing raw data. This can help models learn from a more diverse dataset while maintaining data privacy.

  - Over-the-Air (OTA) Updates: Implement robust mechanisms for securely updating models and software on edge devices to address performance degradation or newly identified hallucination patterns.
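Hierarchical inference reduces to a routing policy. The sketch below assumes a hypothetical `HierarchicalRouter` that accepts confident edge predictions, escalates uncertain ones to a cloud model when the link is up, and otherwise keeps acting on the provisional edge result while queuing the sample in a bounded buffer for later re-evaluation. `cloud_infer` stands in for whatever remote call a real system would make.

```python
from collections import deque


class HierarchicalRouter:
    """Hypothetical edge-first routing policy for hierarchical inference."""

    def __init__(self, min_conf=0.85, queue_len=64):
        self.min_conf = min_conf
        # Bounded backlog of uncertain samples awaiting cloud re-evaluation;
        # when full, the oldest entries are dropped first.
        self.pending = deque(maxlen=queue_len)

    def route(self, sample_id, label, conf, link_up, cloud_infer):
        """Return (label, source) for one edge prediction."""
        if conf >= self.min_conf:
            return label, "edge"                      # confident: act locally
        if link_up:
            return cloud_infer(sample_id), "cloud"    # uncertain: escalate now
        self.pending.append(sample_id)                # offline: defer re-check
        return label, "edge_provisional"

    def drain(self, cloud_infer):
        """Re-evaluate queued samples once connectivity returns."""
        results = []
        while self.pending:
            sid = self.pending.popleft()
            results.append((sid, cloud_infer(sid)))
        return results


def fake_cloud(sample_id):
    return "verified"   # placeholder for a remote model call


router = HierarchicalRouter(min_conf=0.85, queue_len=2)
r1 = router.route(1, "normal", 0.97, link_up=False, cloud_infer=fake_cloud)
r2 = router.route(2, "bearing_fault", 0.41, link_up=True, cloud_infer=fake_cloud)
r3 = router.route(3, "bearing_fault", 0.41, link_up=False, cloud_infer=fake_cloud)
```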

- Human-in-the-Loop (HITL) Validation: Even with advanced automation, human oversight remains crucial.

  - Alerting Mechanisms: Design systems to alert human operators when potential hallucinations are detected, allowing for manual review and intervention.

  - Feedback Loops: Establish clear pathways for human feedback to be incorporated back into the model retraining process, continuously improving performance and reducing future hallucinations (ServerMania, 2025).
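A human-in-the-loop pathway can be as simple as a bounded review queue. In the sketch below, which assumes hypothetical `ReviewQueue` and `FlaggedSample` types, flagged predictions trigger an alert callback, operators attach their own labels, and the samples where the operator disagreed with the model become candidates for the next retraining set.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class FlaggedSample:
    sample_id: int
    predicted: str
    reason: str                        # which check flagged it
    human_label: Optional[str] = None  # filled in by an operator


class ReviewQueue:
    def __init__(self, capacity=100, alert=print):
        self.items = deque(maxlen=capacity)  # bounded: oldest items dropped first
        self.alert = alert                   # e.g. a display, buzzer, or uplink

    def flag(self, sample_id, predicted, reason):
        item = FlaggedSample(sample_id, predicted, reason)
        self.items.append(item)
        self.alert(f"review needed: sample {sample_id} ({reason})")
        return item

    def label(self, sample_id, human_label):
        """Record an operator's verdict; returns False if the sample is gone."""
        for item in self.items:
            if item.sample_id == sample_id:
                item.human_label = human_label
                return True
        return False

    def corrections(self):
        """(sample_id, human_label) pairs where the operator overruled the model."""
        return [(i.sample_id, i.human_label) for i in self.items
                if i.human_label is not None and i.human_label != i.predicted]


alerts = []
review = ReviewQueue(capacity=100, alert=alerts.append)
review.flag(7, "bearing_fault", "low confidence")
review.flag(8, "normal", "z-score outlier")
review.label(7, "normal")   # operator: false alarm, i.e. a hallucinated fault
review.label(8, "normal")   # operator agrees with the model
```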

- Retrieval-Augmented Generation (RAG) for Factual Consistency: For generative tasks on the edge (e.g., generating summaries or diagnostic reports), RAG can be invaluable. By giving the AI access to a curated, factual database, it can ground its responses in verified information, significantly reducing fabricated outputs (ServerMania, 2025). While often associated with larger LLMs, the principles of retrieving relevant, verified information can be applied to smaller, domain-specific models on the edge to enhance factual accuracy.
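Full RAG pairs a retriever with a generative model; the retrieval half, together with a rule to decline when nothing relevant is found, can be sketched on its own. The example below substitutes a bag-of-words cosine similarity for the learned embeddings a real system would typically use, and the fact table, fault codes, and `min_sim` threshold are invented for illustration.

```python
import math
import re
from collections import Counter

# Hypothetical curated knowledge base of verified fault explanations.
FACTS = {
    "E101": "bearing temperature above limit indicates lubrication failure",
    "E202": "motor current imbalance between phases indicates phase loss",
    "E303": "vibration spike at shaft frequency indicates misalignment",
}


def tokens(text):
    """Lower-cased bag of words."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(count * b[t] for t, count in a.items())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(query, facts=FACTS, min_sim=0.2):
    """Best-matching (fact_id, text, similarity), or None below min_sim."""
    q = tokens(query)
    scored = [(cosine(q, tokens(text)), fid, text) for fid, text in facts.items()]
    sim, fid, text = max(scored)
    return (fid, text, sim) if sim >= min_sim else None


def grounded_report(query):
    """Only emit explanations backed by a retrieved, verified fact."""
    hit = retrieve(query)
    if hit is None:
        return "No verified explanation found; escalating for review."
    fid, text, _ = hit
    return f"[{fid}] {text}"


report = grounded_report("high vibration spike on the shaft")
unknown = grounded_report("display brightness flicker")
```

In a complete pipeline the retrieved fact would be placed in the generator's context rather than returned directly; declining to answer below the similarity threshold helps keep the system from filling the gap with fabricated content.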

The journey towards truly reliable edge AI is continuous. As embedded engineers, we are at the forefront of this evolution, tasked with designing and deploying systems that are not just intelligent but also trustworthy. The detection and prevention of AI hallucinations are not merely an academic exercise; they are fundamental to the safety, efficiency, and public acceptance of AI in critical real-world applications.

Embrace these challenges as opportunities to innovate. The solutions lie in a deep understanding of both the AI models and the unique constraints of embedded systems. Collaborate with data scientists, hardware designers, and domain experts to build robust pipelines, from data collection and model training to deployment and continuous monitoring.

Are you an embedded engineer passionate about pushing the boundaries of edge AI and building systems that stand up to the toughest challenges? If you’re ready to tackle the complexities of AI reliability and contribute to the next generation of intelligent edge devices, we want to hear from you.

Connect with RunTime Recruitment today to explore how your expertise can make a real-world impact. Visit our website or reach out to our specialist recruiters to discuss your next career move.