The rise of the Internet of Things (IoT) has driven an explosion of data generated by intelligent devices at the network’s edge. This real-time data holds immense potential for driving insights and enabling autonomous decision-making. Embedded engineers are at the forefront of this revolution, tasked with designing systems that can harness the power of AI at the edge. But what exactly is Edge AI, and how can it be effectively implemented in resource-constrained embedded systems? This comprehensive guide delves into the world of Edge AI, equipping embedded engineers with the knowledge and strategies to build efficient and intelligent solutions.

Edge AI in Embedded Systems

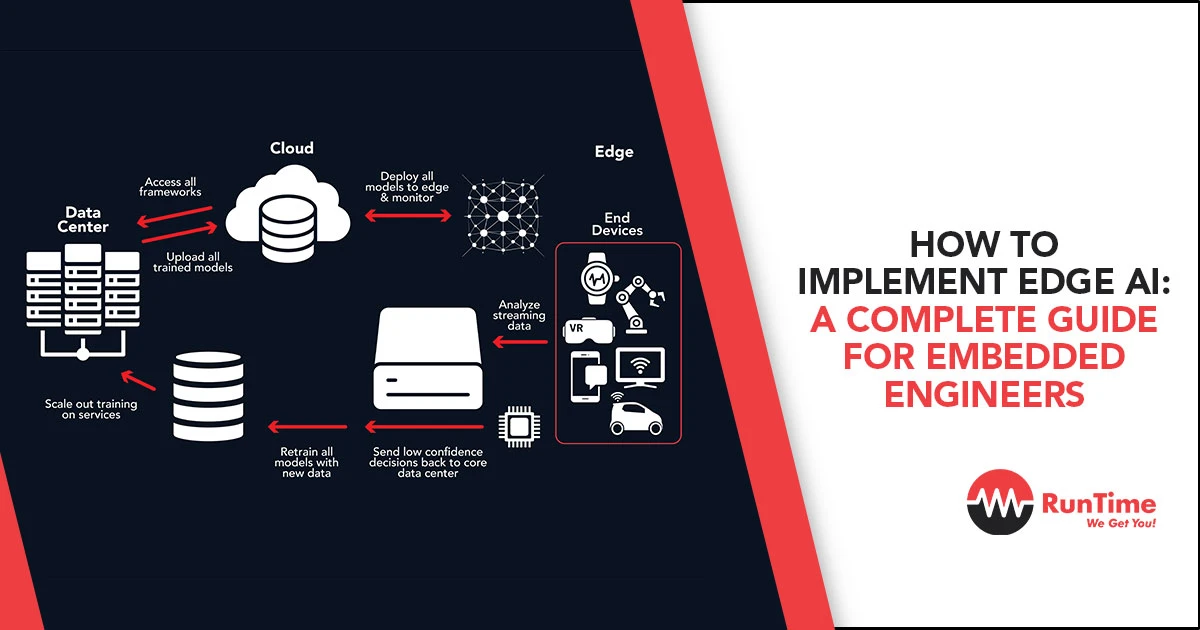

Edge AI refers to the processing of AI algorithms directly on devices located at the edge of a network, as opposed to relying on centralized cloud servers. This distributed approach offers several advantages:

- Reduced Latency: By processing data locally, Edge AI avoids the round trip to a cloud server, significantly reducing latency. This is critical for applications requiring real-time responses, such as autonomous vehicles or industrial process control.

- Improved Bandwidth Efficiency: Local processing minimizes the amount of data transmitted across the network, alleviating bandwidth limitations and associated costs.

- Enhanced Privacy and Security: Sensitive data can be processed on-device, mitigating security risks associated with cloud storage and transmission.
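To put the bandwidth point in rough numbers, the sketch below compares streaming raw sensor samples to the cloud against sending only on-device inference results. The sensor configuration (a 3-axis accelerometer at 1 kHz with 16-bit samples) and the 4-byte-per-second result format are hypothetical assumptions chosen for illustration, and protocol overhead is ignored.

```python
def bytes_per_day(samples_per_second: float, channels: int, bytes_per_sample: int) -> float:
    """Raw payload generated per day, ignoring protocol and framing overhead."""
    seconds_per_day = 86_400
    return samples_per_second * channels * bytes_per_sample * seconds_per_day

# Hypothetical sensor: 3-axis accelerometer, 1 kHz sample rate, 16-bit (2-byte) samples.
raw = bytes_per_day(samples_per_second=1_000, channels=3, bytes_per_sample=2)

# Hypothetical edge model output: one 4-byte result (e.g. class ID + score) per second.
results = bytes_per_day(samples_per_second=1, channels=1, bytes_per_sample=4)

print(f"raw stream:   {raw / 1e6:.1f} MB/day")
print(f"edge results: {results / 1e3:.1f} kB/day")
print(f"reduction:    {raw / results:.0f}x")
```

Under these assumptions the raw stream is about 518.4 MB per day while the edge results are about 345.6 kB per day, a 1500x reduction. Real savings depend on the sampling rate, how often the model produces output, and how much raw data still has to be uploaded, for example to collect training data.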

The applications of Edge AI in embedded systems are vast and constantly evolving. Here are a few examples:

- Predictive Maintenance: In industrial settings, sensor data from machinery can be analyzed by Edge AI models to predict potential failures and enable proactive maintenance, minimizing downtime and optimizing resource allocation.

- Smart Wearables: Edge AI can power intelligent features in wearables, like activity recognition, anomaly detection, and personalized health insights, all while preserving user privacy by processing data on-device.

- Smart Robotics: Robots equipped with Edge AI can analyze their environment in real-time, enabling them to navigate autonomously, adapt to changing conditions, and interact with objects more effectively.

Mainstream Integration of Edge AI

The integration of Edge AI is fundamentally transforming the landscape of embedded system design. Traditionally, embedded systems performed tasks that required minimal processing power. Now, engineers must grapple with new challenges:

- Resource Constraints: Embedded systems typically have limitations in terms of processing power, memory, and battery life. Implementing AI models on such devices necessitates careful optimization to ensure efficient performance.

- Security Concerns: As Edge AI devices collect and process potentially sensitive data, robust security measures are crucial to prevent unauthorized access and data breaches.

- Development Complexity: Integrating AI models into embedded systems introduces new development considerations, such as model selection, optimization techniques, and specialized tools for deployment and testing.

However, these challenges are outweighed by the exciting possibilities unlocked by Edge AI. Embedded systems can now become more intelligent and autonomous, capable of real-time decision-making and adaptation to their environments. This opens doors for innovative applications that were previously impractical.

Data Management in Edge AI

Effective data management is paramount for successful Edge AI implementations. The success of AI models hinges on the quality and relevance of the data they are trained on. Here’s how to optimize data management for Edge AI:

- Data Pre-processing: Raw sensor data often requires pre-processing to remove noise, extract features, and transform it into a format suitable for the chosen AI model. This pre-processing can be performed on-device or off-device, depending on computational constraints.

- Data Filtering and Compression: Edge devices often generate large volumes of data. Techniques like data filtering and compression can be employed to reduce the amount of data stored and transmitted, optimizing resource utilization and bandwidth efficiency.

- Efficient Storage: Choosing the right storage solution for Edge AI applications is crucial. Embedded devices often have limited storage capacity, so engineers must consider options like flash memory or external storage solutions depending on the application’s needs.
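As a minimal sketch of the pre-processing and filtering ideas, the Python below condenses a window of raw samples into a few summary features and applies a simple send-on-delta (deadband) filter that drops readings which have not changed meaningfully since the last one kept. The chosen features and the threshold are illustrative assumptions; on an MCU the same logic would usually be written in C, but the arithmetic is the same.

```python
import math
from typing import Dict, Iterable, List, Optional

def extract_features(window: List[float]) -> Dict[str, float]:
    """Summarize one window of raw samples into a few compact features.

    Mean, RMS, and peak-to-peak are common choices for vibration or motion
    data; the right feature set depends on the signal and the model.
    """
    n = len(window)
    if n == 0:
        raise ValueError("window must not be empty")
    mean = sum(window) / n
    rms = math.sqrt(sum(x * x for x in window) / n)
    peak_to_peak = max(window) - min(window)
    return {"mean": mean, "rms": rms, "peak_to_peak": peak_to_peak}

def send_on_delta(readings: Iterable[float], threshold: float) -> List[float]:
    """Deadband filter: keep a reading only if it differs from the last kept
    value by more than `threshold`. The first reading is always kept."""
    kept: List[float] = []
    last: Optional[float] = None
    for value in readings:
        if last is None or abs(value - last) > threshold:
            kept.append(value)
            last = value
    return kept
```

For example, the window `[0.0, 1.0, 0.0, -1.0]` yields a mean of 0.0, an RMS of about 0.707, and a peak-to-peak of 2.0, while `send_on_delta([20.0, 20.1, 20.2, 21.0, 21.05, 19.5], 0.5)` keeps only `[20.0, 21.0, 19.5]`.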

Building Effective Edge AI Solutions

Having a grasp of data management strategies, we can now delve into the nitty-gritty of building Edge AI applications. Here are some key considerations for embedded engineers:

- Framework Selection: Several frameworks cater specifically to Edge AI development, offering pre-optimized libraries and tools for deploying AI models on resource-constrained devices. Popular options include TensorFlow Lite, Edge Impulse, and Arm NN. The choice of framework depends on factors like the target hardware platform, the desired level of customization, and the supported AI model types.

- Model Optimization Techniques: AI models trained for cloud deployment often need significant optimization before they can run efficiently on Edge devices. Techniques like quantization (representing weights and activations with fewer bits, for example 8-bit integers instead of 32-bit floats) and pruning (removing redundant or low-importance weights and connections) can significantly reduce model size and computational complexity, typically with only a small loss in accuracy.

- Development Tools: Several tools streamline the development process for Edge AI applications. These tools may include simulators for testing and debugging models on emulated hardware, profilers for identifying performance bottlenecks, and code generation tools for porting models to specific hardware platforms.
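The following sketch illustrates quantization and pruning on a plain list of weights. Magnitude pruning zeroes the smallest weights, and 8-bit affine quantization maps floats to integers using a scale and zero point so that `real ≈ (q - zero_point) * scale`. This follows the general scheme used for 8-bit integer models in frameworks such as TensorFlow Lite, but it is a simplified per-tensor illustration, not any framework’s actual implementation.

```python
from typing import List, Tuple

def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices sorted from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned

def quantize_int8(values: List[float]) -> Tuple[List[int], float, int]:
    """Affine-quantize floats to int8 so that real ~= (q - zero_point) * scale."""
    lo = min(min(values), 0.0)  # include 0.0 in the range so zero maps exactly
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 if hi > lo else 1.0
    zero_point = int(round(-128 - lo / scale))  # lo maps to -128, hi to 127
    q = [max(-128, min(127, int(round(v / scale)) + zero_point)) for v in values]
    return q, scale, zero_point

def dequantize(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Map int8 values back to approximate floats."""
    return [(x - zero_point) * scale for x in q]
```

Storing each weight as int8 instead of float32 cuts weight storage by 4x, and for values inside the quantized range the round-trip error is at most half a step (`scale / 2`). Pruned weights only save memory if the runtime stores them in a sparse or compressed format; on their own, zeros in a dense tensor take the same space.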

Enhancing Edge AI Workflows

Beyond the core development aspects, optimizing the entire workflow for Edge AI applications is crucial. Here’s how to streamline the process:

- Continuous Integration and Delivery (CI/CD): Implementing a CI/CD pipeline automates the testing, deployment, and update process for Edge AI models. This enables rapid iteration and efficient rollout of updates to edge devices.

- Testing and Debugging: Testing and debugging Edge AI models can be challenging due to resource limitations and real-world deployment scenarios. Utilizing tools like unit tests, hardware-in-the-loop simulations, and remote debugging capabilities can expedite this process.

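- Model Monitoring and Updating: Real-world data can drift away from the data a model was trained on, degrading its accuracy over time, so deployed models need ongoing monitoring and periodic updates. Techniques like federated learning let devices improve a shared model by training locally and sending only model updates, not raw data, which helps keep models accurate while protecting user privacy.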
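To make the federated learning idea more concrete, here is a minimal sketch of the aggregation step from Federated Averaging (FedAvg): each device trains locally and reports its updated weights together with the number of samples it trained on, and the server computes a sample-weighted average. Weights are flattened into plain lists for simplicity, and the surrounding machinery (client selection, secure aggregation, transport) is out of scope here.

```python
from typing import List, Sequence

def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sample_counts: Sequence[int]) -> List[float]:
    """FedAvg aggregation: average client weights, weighting each client by the
    number of local training samples it used. Only weights leave the device."""
    if not client_weights or len(client_weights) != len(client_sample_counts):
        raise ValueError("need at least one client and one sample count per client")
    total = sum(client_sample_counts)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    n_params = len(client_weights[0])
    if any(len(w) != n_params for w in client_weights):
        raise ValueError("all clients must share the same model shape")
    averaged = [0.0] * n_params
    for weights, count in zip(client_weights, client_sample_counts):
        share = count / total  # this client's contribution to the average
        for i, w in enumerate(weights):
            averaged[i] += share * w
    return averaged
```

For two clients with weights `[1.0, 2.0]` and `[3.0, 6.0]` trained on 1 and 3 samples respectively, the aggregate is `[2.5, 5.0]`; the new global weights are then pushed back to the devices for the next round.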

Hardware Considerations for Edge AI Deployment

Now that we’ve explored development considerations, let’s shift focus to the hardware that underpins Edge AI applications.

When selecting hardware, keep in mind that the platform significantly impacts the capabilities and limitations of an Edge AI implementation. Key factors to consider include:

- Processing Power: The computational demands of the AI model heavily influence the required processing power of the chosen hardware. Embedded engineers must strike a balance between performance and power consumption.

- Memory Constraints: Limited memory on Edge devices necessitates careful memory management during model deployment and execution. Techniques like model pruning and quantization can help alleviate memory limitations.

- Power Efficiency: Battery life is often a critical concern for Edge devices. Low-power processors and hardware optimizations are essential for ensuring long-lasting operation.
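Memory and power budgets can be estimated early with simple arithmetic. The sketch below approximates weight storage for a given bit width and pruning level, and the battery life of a device that wakes periodically to run inference. The 250k-parameter model, the 1000 mAh cell, and the current figures are hypothetical; the estimates ignore activation memory, regulator losses, and battery self-discharge.

```python
def model_size_bytes(n_params: int, bits_per_weight: int, sparsity: float = 0.0) -> float:
    """Approximate weight storage. Assumes pruned (zero) weights are not stored,
    which requires a sparse or compressed format; index overhead is ignored."""
    return n_params * (1.0 - sparsity) * bits_per_weight / 8

def battery_life_hours(capacity_mah: float, active_ma: float, sleep_ma: float,
                       active_s: float, period_s: float) -> float:
    """Runtime of a duty-cycled device that is active for `active_s` seconds
    out of every `period_s` seconds and asleep the rest of the time."""
    if not 0 < active_s <= period_s:
        raise ValueError("active time must be positive and no longer than the period")
    avg_ma = (active_ma * active_s + sleep_ma * (period_s - active_s)) / period_s
    return capacity_mah / avg_ma

# Hypothetical 250k-parameter model at different precisions.
for label, bits, sparsity in [("float32", 32, 0.0), ("int8", 8, 0.0), ("int8, 50% pruned", 8, 0.5)]:
    print(f"{label:>17}: {model_size_bytes(250_000, bits, sparsity) / 1024:.0f} KiB")

# Hypothetical device: 1000 mAh cell, 20 mA for 50 ms of inference each second, 10 uA asleep.
hours = battery_life_hours(capacity_mah=1000, active_ma=20, sleep_ma=0.01,
                           active_s=0.05, period_s=1.0)
print(f"estimated battery life: {hours:.0f} h ({hours / 24:.0f} days)")
```

With these numbers the average draw is about 1.01 mA, giving roughly 990 hours (about 41 days). Halving the active time per inference roughly doubles battery life here, because active current dominates the average.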

Common hardware platforms for Edge AI include:

- Microcontrollers (MCUs): Low-power MCUs are suitable for simple AI models requiring minimal processing power.

- Field-Programmable Gate Arrays (FPGAs): FPGAs offer high customizability and can be optimized for specific AI algorithms, but require more development expertise.

- Application-Specific Integrated Circuits (ASICs): Custom ASICs provide the highest performance and energy efficiency, but they carry high non-recurring engineering costs and long development cycles, so they generally pay off only at high production volumes.

Selecting and Training AI Models for Edge Devices

The choice of AI model significantly impacts the feasibility and effectiveness of Edge AI implementations. Here’s how to select and train models for Edge deployment:

- Model Size and Complexity: Smaller, less complex models with lower computational requirements are better suited for Edge devices. Techniques like pruning and quantization can further optimize model size for deployment.

- Accuracy vs. Efficiency Trade-off: There’s a trade-off between model accuracy and computational efficiency. Carefully evaluate the application’s specific needs to determine the acceptable level of accuracy for an Edge-deployable model.

- Transfer Learning: Leveraging pre-trained models and fine-tuning them on smaller, device-specific datasets can be an effective approach for Edge AI applications. This reduces training time and computational resources required for model development.
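One way to make the accuracy vs. efficiency trade-off explicit is to filter candidate models against hard device budgets and a minimum acceptable accuracy, then choose among the survivors. The sketch below picks the fastest feasible candidate. The `Candidate` fields, the budgets, and the example numbers are hypothetical; in practice they would come from your own validation runs and on-target profiling.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Candidate:
    name: str
    accuracy: float    # validation accuracy, 0..1
    flash_kb: float    # model size in flash
    ram_kb: float      # peak working memory during inference
    latency_ms: float  # measured on the target device

def pick_model(candidates: List[Candidate], min_accuracy: float,
               flash_budget_kb: float, ram_budget_kb: float,
               deadline_ms: float) -> Optional[Candidate]:
    """Return the fastest candidate that meets the accuracy floor and fits all
    device budgets, or None if nothing qualifies."""
    feasible = [
        c for c in candidates
        if c.accuracy >= min_accuracy
        and c.flash_kb <= flash_budget_kb
        and c.ram_kb <= ram_budget_kb
        and c.latency_ms <= deadline_ms
    ]
    return min(feasible, key=lambda c: c.latency_ms, default=None)

# Hypothetical candidates, for illustration only.
candidates = [
    Candidate("large_fp32", 0.95, 900, 300, 120),
    Candidate("medium_int8", 0.93, 220, 90, 35),
    Candidate("small_int8", 0.88, 60, 30, 8),
]
best = pick_model(candidates, min_accuracy=0.90, flash_budget_kb=256,
                  ram_budget_kb=128, deadline_ms=50)
print(best.name if best else "no model fits")
```

With a 0.90 accuracy floor, a 256 KB flash budget, a 128 KB RAM budget, and a 50 ms deadline, this prints `medium_int8`: the large model does not fit in flash and the small one misses the accuracy floor. To prefer the most accurate feasible model instead, replace the final line of `pick_model` with `max(feasible, key=lambda c: c.accuracy, default=None)`.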

Navigating Challenges

While Edge AI offers immense potential, it also presents unique technical and security challenges that embedded engineers must address:

- Resource Limitations: Edge devices often have limited processing power, memory, and battery life. Techniques like model optimization, efficient data management, and choosing the right hardware platform are crucial for overcoming these constraints.

- Real-Time Processing Demands: Many Edge AI applications require real-time processing and decision-making. Careful consideration of model latency and hardware performance is essential to ensure timely responses.

- Limited Connectivity: Edge devices may operate in areas with unreliable or limited network connectivity. In such scenarios, it becomes crucial to run inference entirely on-device and to store models, data, and results locally until a connection is available.
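For the limited-connectivity case, a common pattern is store-and-forward: run inference locally, queue the results, and upload them whenever a link is available. The sketch below uses a bounded `collections.deque` so memory use stays fixed. The `send` callback is a hypothetical stand-in for whatever transport the device actually uses (MQTT, HTTP, LoRaWAN, and so on) and is assumed to return `True` on success.

```python
from collections import deque
from typing import Any, Callable, Deque, Dict

class StoreAndForward:
    """Buffer results while offline and flush them when a connection returns.
    The buffer is bounded: when full, the oldest entries are dropped."""

    def __init__(self, capacity: int):
        self._buffer: Deque[Dict[str, Any]] = deque(maxlen=capacity)

    def record(self, result: Dict[str, Any]) -> None:
        """Queue one inference result (evicts the oldest if at capacity)."""
        self._buffer.append(result)

    def flush(self, send: Callable[[Dict[str, Any]], bool]) -> int:
        """Send buffered results oldest-first. Stop at the first failed send and
        keep the remaining items for the next attempt. Returns the number sent."""
        sent = 0
        while self._buffer:
            if not send(self._buffer[0]):
                break
            self._buffer.popleft()  # remove only after a confirmed send
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._buffer)
```

In use, the inference loop calls `record()` after every prediction, and a connectivity handler calls `flush()` when the link comes up. Dropping the oldest entries is just one policy; a device that must never lose data would persist the buffer to flash instead.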

Security Considerations for Edge AI

Security is paramount for Edge AI deployments, as these devices often collect and process sensitive data. Here are some key security considerations:

- Data Privacy: Protecting user privacy is essential. Techniques like anonymization and on-device data processing can help mitigate risks.

- Device Security: Edge devices are vulnerable to hacking attempts. Secure boot, secure communication protocols, and robust authentication mechanisms are crucial to safeguard these devices.

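- Model Security: AI models themselves can be targeted, whether by tampering with the deployed model file or by adversarial inputs crafted to mislead the model. Techniques like model verification (for example, checking a cryptographic signature or hash before loading) and intrusion detection can help ensure model integrity.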
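As an illustration of model verification and on-device pseudonymization, the sketch below uses Python’s standard `hmac` and `hashlib` modules: the build pipeline tags the model file with HMAC-SHA256, and the device recomputes the tag and refuses to load a model that does not match. The hard-coded key is a placeholder assumption. In production, asymmetric signatures (for example ECDSA or Ed25519) are usually preferable, because the device then holds only a public key that cannot be used to sign a malicious model, and any secret keys belong in secure storage such as a secure element.

```python
import hashlib
import hmac

def sign_model(model_bytes: bytes, key: bytes) -> bytes:
    """Build-time step: compute an HMAC-SHA256 tag over the model file."""
    return hmac.new(key, model_bytes, hashlib.sha256).digest()

def verify_model(model_bytes: bytes, tag: bytes, key: bytes) -> bool:
    """Device-side step: accept the model only if its tag matches.
    hmac.compare_digest avoids content-based short-circuiting, which makes
    timing attacks on the comparison harder."""
    expected = hmac.new(key, model_bytes, hashlib.sha256).digest()
    return hmac.compare_digest(expected, tag)

def pseudonymize_id(device_id: str, key: bytes) -> str:
    """Replace a raw device ID with a stable keyed hash before uploading data.
    Truncated to 16 hex characters for compactness (an arbitrary choice)."""
    return hmac.new(key, device_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]

# Placeholder key for illustration only; never hard-code real keys.
KEY = b"example-key-do-not-use"
model = b"example model file bytes"
tag = sign_model(model, KEY)
print(verify_model(model, tag, KEY))            # True
print(verify_model(model + b"\x00", tag, KEY))  # False: the file was modified
```

Note that the keyed hash in `pseudonymize_id` is pseudonymization rather than full anonymization: anyone holding the key can still link records from the same device.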

Final Word

The future of embedded systems is inextricably linked to the continued evolution of Edge AI. As Edge AI technologies mature and hardware capabilities improve, we can expect even more innovative and intelligent applications to emerge. By embracing the challenges and opportunities presented by Edge AI, embedded engineers can play a pivotal role in shaping this exciting future.

This comprehensive guide has equipped you with the foundational knowledge and practical strategies to embark on your Edge AI development journey. Remember, the key lies in understanding the unique constraints of embedded systems, optimizing models and workflows for efficiency, and prioritizing security throughout the development process. As you delve deeper into this domain, keep exploring new tools, frameworks, and best practices to stay ahead of the curve and unlock the transformative potential of Edge AI in your embedded engineering projects.

Hire the Best Engineers with RunTime

At RunTime, we are dedicated to helping you find the best Control Systems Engineering talent for your recruitment needs. Our team consists of engineers-turned-recruiters with an extensive network and a focus on quality. By partnering with us, you will have access to great engineering talent that drives innovation and excellence in your projects.

Discover how RunTime has helped 423+ tech companies find highly qualified and talented engineers to enhance their team’s capabilities and achieve strategic goals.

On the other hand, if you’re a control systems engineer looking for new opportunities, RunTime Recruitment’s job site is the perfect place to find job vacancies.