The embedded systems landscape is in the midst of a tectonic shift. For decades, embedded engineers have meticulously crafted hardware description language (HDL) code, whether Verilog or VHDL, to define the behavior of FPGAs and ASICs. This is a craft that demands deep expertise, an intimate understanding of hardware architecture, and painstaking attention to detail. Writing HDL isn’t like writing C code; it’s a parallel universe where a line of code describes not a step in a sequence of instructions, but the physical connections and logic of a circuit. It’s a world of flip-flops, state machines, and timing constraints, where a single missing assignment can infer an unintended latch and a design that passes every simulation can still fail timing in hardware.

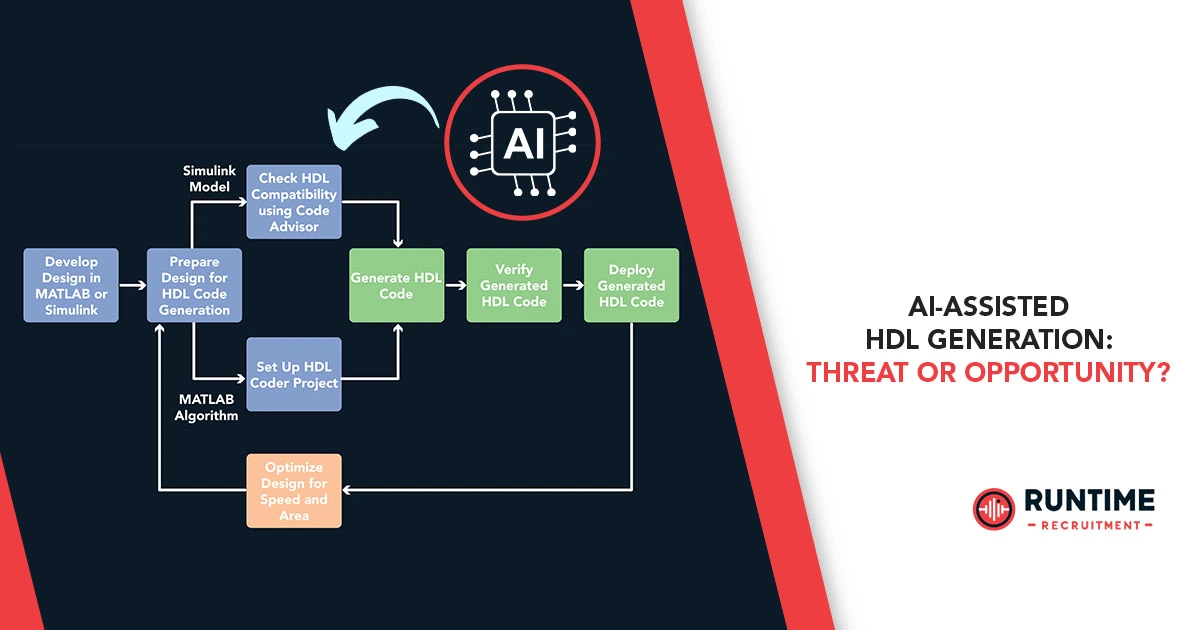

But a new player has entered the arena: AI-assisted HDL generation. Tools powered by large language models (LLMs), similar to the ones that write prose or code in other domains, are now being trained on large repositories of hardware designs. From a simple text prompt, they can generate RTL (register-transfer level) code and suggest optimizations, and when wired into an existing toolchain they can hand that code straight to synthesis.

This has sparked a lively debate within the embedded engineering community. Is this a powerful new tool that will accelerate innovation and free engineers from tedious, repetitive tasks? Or is it a dangerous shortcut that will de-skill the workforce, introduce subtle yet catastrophic bugs, and erode the very foundation of our profession? The answer, as with most things in this field, is complex and lies somewhere in between. It is both a threat and an opportunity, and the way we navigate this duality will define the future of our industry.

The Case for Opportunity: A Paradigm Shift in Productivity

The most compelling argument for AI-assisted HDL generation is its potential to revolutionize productivity. For engineers, especially those on tight deadlines, the prospect of automating significant portions of the design process is incredibly appealing. Think of the time and effort spent writing boilerplate code for common modules like FIFOs, DMA controllers, or simple state machines. An AI tool can generate a functional starting point for these components in seconds, allowing the engineer to focus on the high-level architecture and critical, complex parts of the design.

This isn’t about replacing engineers; it’s about augmenting their capabilities. Consider an engineer who needs to integrate a new peripheral into an existing design. Instead of poring over datasheets and manually writing the interface logic, they can use an AI assistant to generate the core code, and then spend their time verifying, optimizing, and ensuring the interface meets all timing requirements. This shifts the role of the engineer from a code-writer to a system architect and verifier—a more strategic and valuable position.

Moreover, AI can democratize hardware design. The steep learning curve of HDLs and the intricate knowledge required to design for FPGAs have traditionally been a barrier to entry for many software engineers. An AI tool can act as a bridge, allowing those with a background in high-level programming to explore the world of hardware acceleration. They can describe their desired functionality in natural language and have the AI translate it into synthesizable HDL. This opens up a new talent pool and fosters a more collaborative, multi-disciplinary approach to embedded systems development.

AI tools can also become invaluable for legacy code maintenance and design exploration. Imagine a legacy VHDL design with poor documentation. An AI can analyze the code, infer its likely function, and provide a clear, human-readable summary that an engineer can then check against the design. When exploring new architectures, an AI can quickly generate multiple design variations for evaluation, providing a rapid prototyping capability that would be impractical with manual effort alone. This accelerated exploration can lead to more efficient and innovative designs.

Finally, AI is showing promise as a tool for bug detection and verification. Models trained on large collections of hardware designs, including designs with known defects, can learn to flag common pitfalls and subtle bugs that often elude human designers. They can suggest corrections and even generate test benches, automating a part of the workflow that is notoriously time-consuming and labor-intensive. This is not just about making our lives easier; it’s about making our designs more reliable and robust.

The Case for Threat: The De-skilling and Bug-Filled Horizon

While the opportunities are vast, the threats posed by AI-assisted HDL generation are equally significant and cannot be ignored. The primary concern is the potential for de-skilling. If engineers become overly reliant on AI to write their code, will they lose the fundamental understanding of how hardware works at the RTL level? Will the next generation of engineers truly grasp the intricacies of clock domains, reset logic, and pipelining if an AI tool handles all the heavy lifting? This erosion of foundational knowledge could be catastrophic. An engineer who can’t debug a subtle timing violation or a multi-cycle path because they never learned how to write the code in the first place is a liability, not an asset.

Then there’s the issue of hallucinations and subtle bugs. While LLMs are powerful, they are not infallible. They can produce code that is syntactically correct but functionally flawed. These bugs are particularly insidious because they may only manifest under specific, rare conditions. That makes them easy to miss in directed simulation, and formal verification will only catch them if someone has written the property they violate. The black-box nature of many of these AI models means that when a bug does appear, the question of why the tool produced it can be impossible to answer, as there’s no clear chain of reasoning behind the AI’s output. A human engineer can trace their own logic to find an error, but an AI’s logic is often opaque and non-deterministic.
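
To make the "syntactically correct but functionally flawed" failure mode concrete, here is a minimal Python sketch rather than real RTL. It models an 8-bit saturating adder twice: once as a golden reference and once as a hypothetical "generated" version with an off-by-one in its overflow check. Both function names and the specific flaw are invented for illustration; no particular tool is known to produce this bug.

```python
def sat_add_reference(a: int, b: int) -> int:
    """Golden model: 8-bit unsigned addition that saturates at 255."""
    return min(a + b, 255)


def sat_add_generated(a: int, b: int) -> int:
    """Hypothetical generated logic with an off-by-one in the overflow check."""
    total = a + b
    if total > 256:  # flaw: the comparison should be total > 255
        return 255
    return total & 0xFF  # a sum of exactly 256 wraps around to 0


# A handful of plausible directed tests, none of which sums to exactly 256.
directed_vectors = [(0, 0), (1, 2), (100, 100), (200, 100), (255, 255)]
directed_pass = all(
    sat_add_generated(a, b) == sat_add_reference(a, b)
    for a, b in directed_vectors
)

# Exhaustive sweep over all 65,536 input pairs.
mismatches = [
    (a, b)
    for a in range(256)
    for b in range(256)
    if sat_add_generated(a, b) != sat_add_reference(a, b)
]

print("directed tests pass:", directed_pass)
print("mismatching input pairs:", len(mismatches), "of", 256 * 256)
```

The directed tests all pass, yet the sweep finds 255 failing pairs, every one of them with a sum of exactly 256. An exhaustive sweep is only feasible here because the input space is tiny; for wider datapaths the same idea has to be approximated with constrained-random stimulus or stated as a formal property.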

The training data for these AI models is another major concern. Many are trained on public repositories, which may contain flawed or sub-optimal designs. The AI, unaware of the quality of its training data, may simply replicate these bad design patterns, propagating errors and inefficient architectures across new projects. There are also intellectual property (IP) and licensing concerns. If an AI model is trained on proprietary or licensed code, and then generates new code based on that data, who owns the output? What are the legal ramifications? This is a legal minefield that has yet to be fully explored.

Furthermore, the integration of these AI tools into existing design flows is not always seamless. Tool chains are complex, with specific requirements for synthesis, simulation, and place-and-route. An AI-generated piece of code might work perfectly in a simple test bench but fail spectacularly when integrated into a larger, more complex system due to incompatible interfaces or unmet timing constraints. The engineer is still responsible for making the final design work, and debugging an AI’s output can be far more challenging than debugging one’s own.

Finally, there’s the question of security. Just as AI can be used to find bugs, it can also be used to introduce malicious logic into a design. A clever adversary could use a compromised AI tool to inject backdoors or hardware Trojans into a seemingly innocuous module. This is a terrifying prospect, especially in mission-critical applications like automotive, aerospace, and medical devices. The trust we place in these AI systems must be carefully weighed against the potential for their misuse.

Navigating the Duality: A Hybrid Future for Embedded Engineering

The future of embedded engineering in the age of AI-assisted HDL generation is not a binary choice between “threat” and “opportunity.” It is a synthesis of both. The successful embedded engineer of tomorrow will not be the one who ignores AI, nor the one who blindly trusts it. They will be the one who understands how to leverage AI as a co-pilot, a powerful assistant that takes care of the mundane so they can focus on the truly challenging and creative aspects of their work.

This means a new emphasis on a few key areas:

1. Mastering the Fundamentals

AI is not an excuse to skip the hard parts of learning. If anything, it makes the foundational knowledge of digital logic, computer architecture, and HDLs even more critical. Engineers must be able to critically evaluate the code an AI generates, understand its potential pitfalls, and, when necessary, debug and correct it. The role shifts from writer to editor, but a good editor needs to understand grammar, syntax, and meaning at a profound level.

2. Verification and Validation as Core Competencies

As the complexity and potential for subtle errors increase with AI-generated code, so too does the importance of verification. Engineers must become masters of simulation, formal verification, and hardware-in-the-loop testing. They need to be able to design comprehensive test benches that can stress the design in ways an AI might not have considered. The “human in the loop” becomes a crucial verifier and validator, the ultimate arbiter of quality and correctness.
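
One way to picture the verifier's role is a reference-model comparison: the engineer writes down the intended behavior independently and drives both the model and the design under test with the same stimulus. The Python sketch below is a simplified illustration, not a real test bench. `FifoModel`, `BuggyFifo`, `random_stimulus`, and `first_mismatch` are hypothetical names, the FIFO policy is an assumption stated in the docstring, and `BuggyFifo` merely stands in for a generated design with a corner-case flaw.

```python
import random
from collections import deque


class FifoModel:
    """Reference model of a synchronous FIFO with a fixed depth."""

    def __init__(self, depth: int) -> None:
        self.depth = depth
        self.items = deque()

    def step(self, push: bool, data: int, pop: bool):
        """Advance one clock cycle and return the popped value, or None.

        Assumed policy: popping an empty FIFO and pushing a full FIFO are
        ignored, and pop is evaluated before push, so a simultaneous
        push and pop on a full FIFO succeeds.
        """
        popped = None
        if pop and self.items:
            popped = self.items.popleft()
        if push and len(self.items) < self.depth:
            self.items.append(data)
        return popped


class BuggyFifo(FifoModel):
    """Stand-in for a design under test with a corner-case flaw:
    fullness is sampled before the pop, so a simultaneous push and pop
    on a full FIFO silently drops the pushed word."""

    def step(self, push: bool, data: int, pop: bool):
        was_full = len(self.items) >= self.depth
        popped = None
        if pop and self.items:
            popped = self.items.popleft()
        if push and not was_full:
            self.items.append(data)
        return popped


def random_stimulus(cycles: int, seed: int):
    """Yield (push, data, pop) tuples, biased toward keeping the FIFO full."""
    rng = random.Random(seed)
    for _ in range(cycles):
        yield rng.random() < 0.7, rng.randrange(256), rng.random() < 0.3


def first_mismatch(dut, ref, stimulus):
    """Return the first cycle where dut and ref outputs differ, else None."""
    for cycle, (push, data, pop) in enumerate(stimulus):
        if dut.step(push, data, pop) != ref.step(push, data, pop):
            return cycle
    return None


# Directed corner case: fill a depth-2 FIFO, push and pop together, drain.
corner_case = [
    (True, 1, False),
    (True, 2, False),
    (True, 3, True),
    (False, 0, True),
    (False, 0, True),
]
print(first_mismatch(BuggyFifo(2), FifoModel(2), corner_case))  # prints 4
```

The flaw does not show up on the cycle where the word is dropped. It only surfaces two cycles later, when the missing word should have been popped, which is why the comparison runs over the whole sequence. Passing `random_stimulus(cycles, seed)` as the stimulus instead of `corner_case` explores the same corner without the engineer having to anticipate it.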

3. Ethical and Security Awareness

Engineers must be acutely aware of the ethical and security implications of using AI in their designs. They must understand the provenance of the training data, the potential for IP infringement, and the risk of malicious code injection. This requires a new layer of diligence and a commitment to using AI tools responsibly and securely.

4. A Focus on High-Level System Design

By automating low-level coding, AI frees engineers to think more about the big picture. This means more time for system-level architecture, performance optimization, power management, and safety-critical design. The role of the embedded engineer will evolve to be more strategic, focusing on how different hardware and software components interact to create a cohesive, high-performing, and reliable system.

Conclusion: The HDL Generation is in Our Hands

AI-assisted HDL generation is not a threat to the embedded engineering profession itself, but to those who fail to adapt. It is a powerful new tool, one that will undoubtedly change how we work, but it will not replace the creativity, intuition, and deep expertise of a skilled engineer. The future belongs to those who can master both the old and the new, who can use AI to build better, faster, and more complex systems while maintaining a firm grasp on the underlying principles of hardware design. The opportunity to innovate and accelerate development is immense, but it comes with a new responsibility: to ensure that we use this technology wisely, ethically, and with a steadfast commitment to quality and security.

This is an exciting and challenging time to be an embedded engineer. The conversation is just beginning, and the future of hardware design is being written right now. It’s time to embrace the change, learn the new tools, and continue to build the next generation of intelligent, connected, and reliable systems.
