The hum of a well-tuned motor, the precise articulation of a robotic arm, the smooth acceleration of an electric vehicle – these are testaments to the sophisticated dance between hardware and software in the embedded world. For decades, embedded engineers have meticulously crafted control algorithms, often relying on classical control theory, finite state machines, and heuristic rules. These systems are highly effective and typically deterministic: their behavior may be complex, but it is ultimately understandable and traceable.

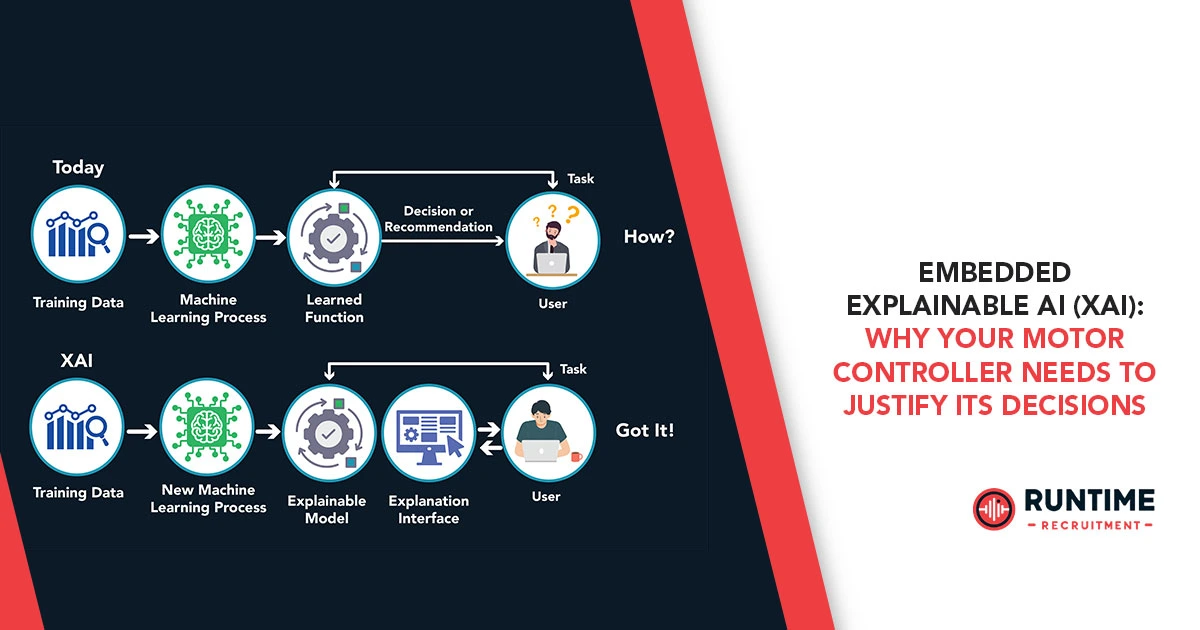

However, a new paradigm is rapidly emerging: embedded Artificial Intelligence (AI). From predictive maintenance to adaptive control, AI offers unprecedented capabilities for optimizing performance, extending lifespan, and achieving new levels of autonomy. Yet, as AI models, particularly deep learning networks, infiltrate the very core of our embedded systems, a critical challenge arises: the “black box” problem. When an AI-powered motor controller makes a decision – perhaps to unexpectedly reduce power, or to slightly alter a trajectory – and the reasons behind that decision are opaque, it creates a chasm of trust and a significant hurdle for debugging, safety certification, and ongoing optimization.

This is where Embedded Explainable AI (XAI) enters the scene, not as a luxury, but as an absolute necessity. It’s no longer enough for your motor controller to simply make the right decision; it increasingly needs to justify it.

The Rise of AI in Embedded Motor Control

Before diving into XAI, let’s briefly acknowledge the compelling reasons why AI is finding its way into motor control in the first place.

- Adaptive Control: Traditional PID controllers, while robust, often struggle with non-linearities, parameter variations, and unknown disturbances. AI, particularly reinforcement learning (RL) and neural networks, can learn control policies, in some cases online, that adapt to changing load conditions, motor degradation, or environmental factors.

- Predictive Maintenance: AI models can analyze sensor data (current, voltage, temperature, vibration) to detect subtle anomalies indicative of impending failure, allowing for proactive maintenance and preventing costly downtime. Imagine a motor controller not just running the motor, but also predicting the motor’s failure well before it happens.

- Efficiency Optimization: AI can explore vast parameter spaces to find optimal operating points for energy efficiency, minimizing losses across various load profiles.

- Anomaly Detection: Identifying unexpected behavior, whether it’s a sensor malfunction or a mechanical issue, becomes significantly more sophisticated with AI, differentiating true anomalies from normal operational variations.

- System Identification: AI can learn accurate models of complex motor dynamics, which can then be used to design more effective controllers or simulators.

These benefits are transformative, promising motor systems that are smarter, more resilient, and more efficient than ever before. But with great power comes great responsibility – and the need for profound understanding.

The Black Box Problem: A Silent Threat to Embedded Systems

The “black box” refers to an AI model whose internal workings are so complex that its decisions are difficult, if not impossible, for a human to understand directly. This is particularly true for deep neural networks, with their millions of interconnected parameters and non-linear activation functions. When such a model is embedded in a motor controller:

- Debugging Becomes a Nightmare: If a motor behaves unexpectedly, how do you debug an AI that can’t tell you why it did what it did? Was it a faulty sensor input? A learned anomaly? A rare edge case it misinterpreted? Without explainability, you’re left with trial and error, a costly and time-consuming process.

- Safety and Certification are Compromised: In safety-critical applications like automotive, aerospace, or industrial robotics, regulatory bodies demand rigorous proof of safe operation. How can you certify a system if its core decision-making component cannot explain its actions? The “black box” complicates compliance with functional safety standards such as ISO 26262 (road vehicles) and IEC 61508 (electrical and electronic safety-related systems in general).

- Lack of Trust and Acceptance: Operators, engineers, and even end-users are less likely to trust a system they don’t understand. If a robotic arm makes a sudden, unexplained movement, or an industrial pump reduces flow without a discernible reason, confidence erodes.

- Difficulty in Optimization and Improvement: Without knowing why an AI model performs sub-optimally in certain scenarios, it’s incredibly challenging to improve it. Is the training data incomplete? Is the model architecture flawed? Explainability provides the crucial feedback loop for iterative refinement.

- Intellectual Property and Knowledge Transfer: The insights learned by an AI remain locked within its parameters. Extracting human-understandable rules or insights, which could be valuable intellectual property or aid in knowledge transfer, becomes far harder without explainability tooling.

For embedded engineers, who are inherently pragmatic and demand transparency and control, the black box problem is not merely an academic curiosity; it’s a significant impediment to deploying advanced AI solutions responsibly.

What is Embedded Explainable AI (XAI)?

Embedded XAI is the field dedicated to developing methods and techniques that allow AI models running on resource-constrained embedded hardware to provide insights into their decision-making processes in a human-understandable way. It’s about opening the black box, not necessarily by understanding every single neuron, but by providing actionable explanations relevant to the application.

The goal isn’t just to make AI “interpretable” in a general sense, but to make it actionably explainable within the tight constraints of embedded systems. This means:

- Resource Efficiency: XAI methods must be lightweight, demanding minimal additional computation, memory, and power, as embedded systems often operate with strict budgets.

- Timeliness: Explanations must be generated quickly, ideally in real-time or near real-time, to be useful for online monitoring, debugging, or reactive safety systems.

- Contextual Relevance: Explanations should be tailored to the embedded domain – for a motor controller, this might mean relating decisions to current, voltage, speed, load, or temperature, rather than abstract feature importance.

- Human Understandability: The explanations must be digestible by an engineer, operator, or even a regulatory body, often in the form of rules, feature importances, counterfactuals, or visual cues.

Key XAI Techniques for Embedded Systems

While the broader field of XAI is vast, specific techniques are more amenable to embedded deployment:

1. Post-Hoc vs. Ante-Hoc Explainability

- Ante-Hoc (Inherently Interpretable Models): These models are designed from the ground up to be explainable. Examples include:

  - Decision Trees/Random Forests: Each decision path is a clear rule. While individual trees are explainable, large forests can become complex.

  - Rule-Based Systems: Explicitly defined rules are inherently transparent.

  - Linear Models: The weight coefficients directly indicate the direction of influence for each input feature and, when the inputs are on comparable scales, its importance.

  - Symbolic AI: AI systems that manipulate symbols and logical expressions can provide step-by-step reasoning.

  - Pros for Embedded: Often simpler, faster inference, and directly provide explanations.

  - Cons for Embedded: May not achieve the same level of accuracy as deep learning for complex tasks.
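
As a minimal sketch of what an inherently interpretable model looks like in practice, the linear model below returns its prediction together with each input's additive contribution. The weights, bias, and feature names are illustrative assumptions, not values from any real controller.

```python
# Hypothetical linear derating model. All weights and names are
# illustrative assumptions chosen only to make the arithmetic visible.
WEIGHTS = {
    "winding_temp_c": -0.004,
    "phase_current_a": -0.010,
    "speed_rpm": -0.00002,
}
BIAS = 1.5


def predict_with_explanation(features):
    """Return the derating factor and each input's additive contribution."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    output = BIAS + sum(contributions.values())
    return output, contributions


def rank_contributions(contributions):
    """Sort inputs by absolute contribution, largest first."""
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


if __name__ == "__main__":
    sample = {"winding_temp_c": 95.0, "phase_current_a": 12.0, "speed_rpm": 3000.0}
    factor, contribs = predict_with_explanation(sample)
    print(f"derating factor: {factor:.3f}")
    for name, value in rank_contributions(contribs):
        print(f"  {name}: {value:+.3f}")
```

Ranking by weight times reading, rather than by raw weight, keeps inputs with very different units (degrees, amps, rpm) comparable. The explanation costs one multiply per input, which is why this family of models suits tight embedded budgets.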

- Post-Hoc (Explanation of Black Box Models): These methods attempt to explain decisions after a complex black box model has made them. This is often necessary when leveraging the power of deep learning.

  - Local Interpretable Model-agnostic Explanations (LIME): LIME works by perturbing a single input instance and observing how the black box model’s prediction changes. It then trains a simple, interpretable model (like a linear regressor) on these perturbed samples and their corresponding black box predictions, effectively creating a local approximation around the decision point. This local model’s features (e.g., specific sensor readings) can then be highlighted as important for that particular decision.

  - SHapley Additive exPlanations (SHAP): Rooted in cooperative game theory, SHAP distributes the “credit” for a prediction among the input features. Each feature’s SHAP value is its marginal contribution to the prediction, averaged over all possible coalitions of the other features, which gives a consistent way to compare feature importance for individual predictions. Exact computation scales exponentially with the number of features, so SHAP can be computationally intensive, but it offers powerful insights.

  - Feature Importance/Sensitivity Analysis: For neural networks, this can involve observing how the output changes when specific input features are slightly varied. This can reveal which sensor inputs have the most significant impact on the controller’s decisions.

  - Activation Maximization/Visualization: While more applicable to computer vision, similar principles can be used to understand what patterns in sensor data maximally activate certain neurons or layers in an embedded network, revealing learned features.

  - Counterfactual Explanations: “What if” scenarios. For example, “The motor reduced speed; had the current been 0.5 A lower, it would have maintained speed.” These require defining plausible counterfactual inputs and running them through the model.

  - Pros for Embedded: Can be applied to powerful deep learning models.

  - Cons for Embedded: Can be computationally more expensive, requiring careful optimization for real-time applications.
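
Two of the lighter post-hoc techniques, sensitivity analysis and counterfactual search, need nothing more than repeated forward passes through the model. The sketch below illustrates both against a stand-in function; `black_box`, its coefficients, and the step sizes are hypothetical and exist only to make the example runnable.

```python
def black_box(x):
    """Stand-in for an opaque model: returns a speed command in rpm.

    x = [phase_current_a, winding_temp_c, load_torque_nm]. Illustrative only.
    """
    current_a, temp_c, torque_nm = x
    stress = 0.6 * current_a + 0.05 * temp_c + 0.8 * torque_nm
    if stress < 15.0:
        return 3000.0
    return 3000.0 - 200.0 * (stress - 15.0)


def sensitivities(model, x, steps):
    """Central finite-difference estimate of d(output)/d(input_i)."""
    grads = []
    for i, h in enumerate(steps):
        hi = list(x)
        lo = list(x)
        hi[i] += h
        lo[i] -= h
        grads.append((model(hi) - model(lo)) / (2.0 * h))
    return grads


def counterfactual(model, x, index, step, target, max_steps=100):
    """Search along one input for the smallest change that satisfies target.

    Returns the signed change applied to x[index], or None if none is found.
    """
    probe = list(x)
    for k in range(1, max_steps + 1):
        probe[index] = x[index] + k * step
        if target(model(probe)):
            return k * step
    return None


if __name__ == "__main__":
    reading = [14.0, 90.0, 3.0]
    print("speed command:", black_box(reading))
    print("sensitivities:", sensitivities(black_box, reading, [0.1, 1.0, 0.1]))
    delta = counterfactual(black_box, reading, 0, -0.1, lambda rpm: rpm >= 2999.0)
    print("current change needed to hold speed:", delta)
```

Both functions cost a fixed, small number of model evaluations, so their worst-case runtime is easy to bound. The counterfactual search here varies a single input in fixed steps; a real system would also need to check that the resulting input is physically plausible.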

2. Model Compression and Distillation for Explainability

A common strategy is to train a complex, powerful black-box model (the “teacher”) offline and then use it to train a smaller, more interpretable model (the “student”) for deployment on the embedded system. The student model can then be inherently explainable or more amenable to post-hoc XAI techniques due to its reduced complexity. A related pattern in motor control keeps the large neural network in a supervisory role: it predicts control parameters, which are then passed to a simpler rule-based or linear controller for execution.
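
The teacher-student idea can be sketched with a deliberately simple student: a lookup table with linear interpolation, sampled from the teacher offline. This is a simplified stand-in for distillation rather than the usual neural-network procedure, and `teacher`, the grid sizes, and the load-to-gain mapping are all assumptions made for illustration.

```python
import math


def teacher(load_fraction):
    """Stand-in for a large offline model: maps load (0..1) to a controller gain."""
    return 2.0 + 1.5 * math.tanh(4.0 * (load_fraction - 0.5))


def distill_to_table(model, lo, hi, points):
    """Sample the teacher on an even grid; the resulting table is the student."""
    step = (hi - lo) / (points - 1)
    xs = [lo + i * step for i in range(points)]
    return xs, [model(x) for x in xs]


def student(table, x):
    """Piecewise-linear interpolation over the distilled table, clamped at the ends."""
    xs, ys = table
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    for i in range(1, len(xs)):
        if x <= xs[i]:
            t = (x - xs[i - 1]) / (xs[i] - xs[i - 1])
            return ys[i - 1] + t * (ys[i] - ys[i - 1])
    return ys[-1]


def max_abs_error(model, table, samples=1000):
    """Fidelity check: largest gap between student and teacher on a dense sweep."""
    xs, _ = table
    lo, hi = xs[0], xs[-1]
    worst = 0.0
    for k in range(samples + 1):
        x = lo + (hi - lo) * k / samples
        worst = max(worst, abs(model(x) - student(table, x)))
    return worst


if __name__ == "__main__":
    for points in (5, 17):
        table = distill_to_table(teacher, 0.0, 1.0, points)
        print(points, "points -> max error", max_abs_error(teacher, table))
```

Every entry in the table can be read and reviewed by an engineer, and the fidelity check quantifies how much accuracy the student gives up in exchange. Table size is the tuning knob between memory footprint and fidelity.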

3. Human-in-the-Loop XAI

Embedded systems, especially those in safety-critical domains, often benefit from human oversight. XAI can facilitate this by:

- Alerting with Explanations: If the motor controller detects an anomaly and makes an unusual decision, it can trigger an alert that includes a brief explanation of why it acted that way (e.g., “reduced power due to excessive winding temperature prediction based on current spikes”).

- Interactive Debugging: Providing tools that allow engineers to query the AI model’s decisions post-mortem, asking “why did you do X?” and receiving a concise, data-backed explanation.

- Trust Calibration: Over time, consistent and accurate explanations build trust, allowing human operators to better understand the system’s capabilities and limitations.
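
An alert that carries its own explanation can be as simple as naming the inputs that contributed most to the decision. The sketch below assumes per-input attributions are already available from some explanation method; `build_alert`, the signal names, and the numbers are hypothetical.

```python
def build_alert(action, contributions, units, top_n=2):
    """Format an operator alert naming the inputs that drove a decision.

    contributions maps an input name to (reading, signed attribution).
    units maps an input name to a display unit; missing units are omitted.
    """
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1][1]), reverse=True)
    reasons = [
        f"{name} = {reading:g} {units.get(name, '')}".strip()
        for name, (reading, _) in ranked[:top_n]
    ]
    return f"{action}; main drivers: " + ", ".join(reasons)


if __name__ == "__main__":
    attributions = {
        "winding_temp": (118.0, -0.42),
        "phase_b_current": (14.2, -0.31),
        "speed": (2950.0, -0.02),
    }
    display_units = {"winding_temp": "degC", "phase_b_current": "A", "speed": "rpm"}
    print(build_alert("Reduced power by 10%", attributions, display_units))
```

Limiting the message to the top few drivers keeps it short enough for a log line or a fieldbus diagnostic frame, while the full attribution set can be stored separately for post-mortem queries.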

The Embedded Engineer’s Imperative: Adopting XAI

For embedded engineers, embracing XAI is not just about keeping up with the latest trends; it’s about future-proofing designs, ensuring safety, and unlocking the full potential of AI in resource-constrained environments.

- Prioritize Explainability from Design Onset: Don’t treat XAI as an afterthought. When selecting AI models and architectures for motor control, consider their inherent explainability. Can a simpler, interpretable model achieve sufficient performance? If not, plan for post-hoc explanation methods during the design phase.

- Understand Your Explanandum: Be clear about what needs explaining and to whom. What kind of explanation is most valuable for your specific application? For safety, perhaps counterfactuals are key. For debugging, feature importance might be more useful. Tailor your XAI approach to the needs of your stakeholders (developers, operators, certifiers).

- Benchmark XAI Overhead: Crucially, evaluate the computational, memory, and power overhead introduced by XAI techniques on your target hardware. Real-time constraints are paramount. Techniques like LIME or SHAP can be resource-intensive; explore optimized, lightweight versions or offline explanation generation if real-time explanations aren’t strictly necessary.

- Integrate XAI into Your Toolchain: Develop or adopt tools that seamlessly integrate XAI output into your existing debugging, monitoring, and simulation environments. Visualizations of feature importance, decision paths, or anomaly explanations can be invaluable.

- Data Quality and Feature Engineering are Still King: Even with XAI, the quality of your input data and the relevance of your engineered features profoundly impact model performance and explainability. Garbage in, garbage out, and the explanations will be no better than the data behind them.

- Iterate and Validate Explanations: Just as you validate your control algorithms, you must validate your explanations. Do they truly reflect the model’s reasoning? Are they understandable to the target audience? Human-in-the-loop feedback is critical here.
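
One way to start benchmarking explanation overhead is to count forward passes, which is a hardware-independent proxy for cost, alongside wall-clock time. The sketch below compares a finite-difference sensitivity pass with exact Shapley values on a toy model. Everything here is an assumption for illustration (`toy_model`, the baseline of zeros, the helper names), and host-side timings are no substitute for measuring cycles, memory, and power on the target.

```python
import time
from itertools import combinations
from math import factorial


class CountingModel:
    """Wraps a model and counts forward passes."""

    def __init__(self, fn):
        self.fn = fn
        self.calls = 0

    def __call__(self, x):
        self.calls += 1
        return self.fn(x)


def toy_model(x):
    """Illustrative stand-in for the deployed network."""
    return 0.6 * x[0] + 0.05 * x[1] + 0.8 * x[2] + 0.01 * x[0] * x[2]


def finite_difference(model, x, h=1e-3):
    """Forward-difference sensitivities: n + 1 forward passes."""
    base = model(x)
    grads = []
    for i in range(len(x)):
        probe = list(x)
        probe[i] += h
        grads.append((model(probe) - base) / h)
    return grads


def exact_shapley(model, x, baseline):
    """Exact Shapley values; absent features take their baseline value."""
    n = len(x)
    cache = {}

    def value(subset):
        if subset not in cache:
            cache[subset] = model([x[i] if i in subset else baseline[i] for i in range(n)])
        return cache[subset]

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for combo in combinations(others, size):
                s = frozenset(combo)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def measure(explainer, fn, *args):
    """Return (result, forward passes, elapsed seconds) for one explanation."""
    model = CountingModel(fn)
    start = time.perf_counter()
    result = explainer(model, *args)
    return result, model.calls, time.perf_counter() - start


if __name__ == "__main__":
    x = [10.0, 80.0, 3.0]
    for name, explainer, args in (
        ("finite difference", finite_difference, (x,)),
        ("exact Shapley", exact_shapley, (x, [0.0, 0.0, 0.0])),
    ):
        _, calls, seconds = measure(explainer, toy_model, *args)
        print(f"{name}: {calls} forward passes, {seconds * 1e6:.1f} us on this host")
```

With caching, exact Shapley needs one forward pass per feature subset, so the count doubles with every added input, while the finite-difference pass grows linearly. That gap is the reason to consider sampling-based approximations or offline explanation generation when the input vector is wide.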

The Future of Embedded Motor Control is Transparent

Imagine a future where a motor controller, equipped with advanced AI, not only flawlessly executes complex motion profiles but also proactively alerts an engineer: “Reduced motor speed by 10% because winding temperature is approaching critical thresholds, exacerbated by increased load current on phase B, indicating potential bearing wear. Recommend inspection within 24 hours.”

This isn’t science fiction; it’s the promise of Embedded XAI. It transforms the opaque black box into a transparent, collaborative partner. It empowers engineers to trust, verify, and ultimately, innovate more rapidly and safely with AI at the heart of their designs. The benefits extend beyond individual projects, paving the way for wider adoption of AI in safety-critical embedded domains, accelerating progress in robotics, autonomous vehicles, and smart manufacturing.

The transition from purely deterministic control to AI-powered adaptive control is a monumental shift. Ensuring that this shift is accompanied by clarity and understanding, rather than opacity and mistrust, is the core mission of Embedded XAI. Your motor controller, and indeed all your embedded AI systems, deserve to justify their decisions.
