Introduction

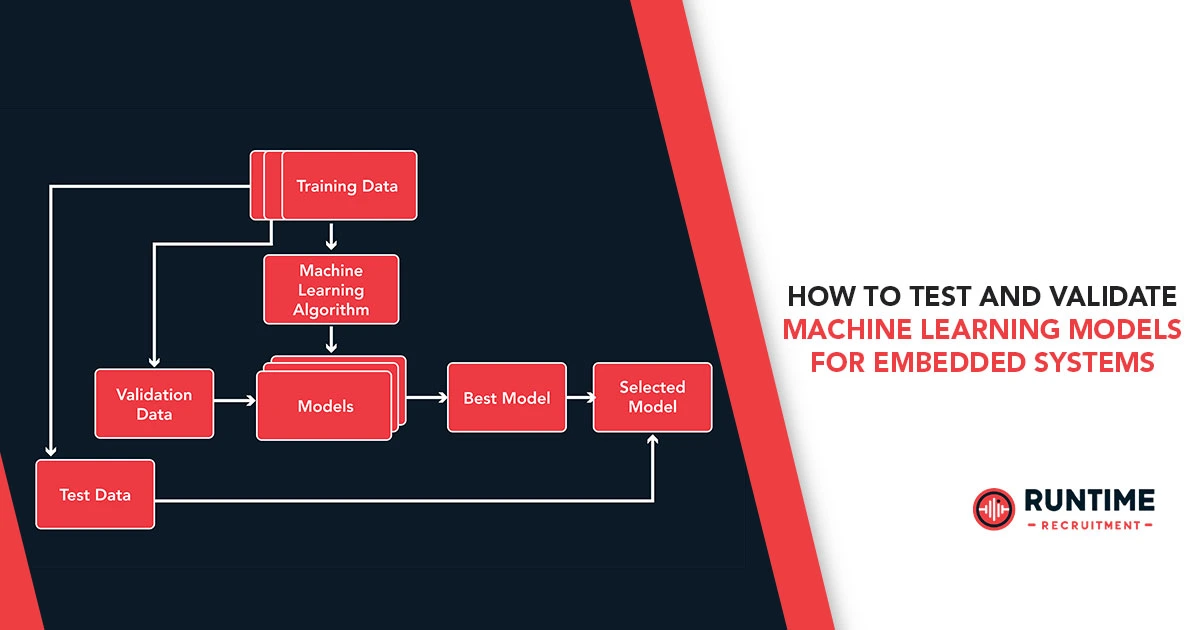

Machine learning (ML) is increasingly being deployed in embedded systems, from microcontrollers in IoT devices to high-performance edge computing platforms. However, testing and validating ML models for embedded environments presents unique challenges due to resource constraints, real-time requirements, and hardware dependencies.

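Unlike traditional software, ML models are statistical: their behavior is learned from data, and correctness is judged by error rates over many inputs rather than by exact expected outputs, so conventional pass/fail testing is not sufficient on its own. Additionally, embedded systems often have limited memory, processing power, and energy budgets, requiring specialized validation techniques.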

This article explores best practices for testing and validating ML models in embedded systems, covering:

- Understanding the Challenges of ML in Embedded Systems

- Data Collection and Preprocessing for Embedded ML

- Model Optimization for Embedded Deployment

- Testing Methodologies for Embedded ML Models

- Validation Strategies for Real-World Performance

- Tools and Frameworks for Testing Embedded ML

- Case Studies and Lessons Learned

By the end, embedded engineers will have a structured approach to ensuring their ML models are robust, efficient, and reliable in production.

1. Understanding the Challenges of ML in Embedded Systems

Before diving into testing methodologies, it’s crucial to recognize the constraints and challenges of deploying ML in embedded environments:

A. Resource Constraints

- Limited Memory (RAM/Flash): Many embedded systems run on microcontrollers with only tens to hundreds of kilobytes of RAM, making large neural networks impractical.

- Low Compute Power: Without GPUs or NPUs, inference must run efficiently on CPUs or DSPs.

- Power Consumption: Battery-operated devices require energy-efficient models.
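To put the memory constraint in numbers, the following Python sketch estimates weight storage for a small fully connected network at float32 and int8 precision and compares it with a flash budget. The layer sizes and the 128 KB budget are hypothetical, every parameter (including biases) is assumed to use the same width, and flash for the runtime and RAM for activations are ignored.

```python
# Hypothetical layer sizes (inputs, outputs) for a small fully connected network.
LAYERS = [(490, 128), (128, 64), (64, 12)]

# Hypothetical flash budget reserved for model weights.
FLASH_BUDGET_BYTES = 128 * 1024


def param_count(layers):
    """Count weights plus one bias per output for each dense layer."""
    return sum(n_in * n_out + n_out for n_in, n_out in layers)


def weight_bytes(layers, bytes_per_param):
    """Storage for all parameters at a uniform width (a simplification)."""
    return param_count(layers) * bytes_per_param


for name, width in (("float32", 4), ("int8", 1)):
    size = weight_bytes(LAYERS, width)
    print(f"{name}: {size} bytes, fits in budget: {size <= FLASH_BUDGET_BYTES}")
```

With these assumed sizes, the float32 weights need about 281 KB and exceed the budget, while the int8 weights (about 70 KB) fit.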

B. Real-Time Requirements

- Some applications (e.g., motor control, sensor fusion) require deterministic latency.

- ML inference must meet strict timing constraints with bounded, predictable jitter.

C. Non-Ideal Data Conditions

- Sensor noise, missing data, and environmental variations affect model performance.

- Training data may not fully represent deployment conditions.

D. Model Drift and Long-Term Stability

- Over time, sensor degradation or changing environments can reduce accuracy.

- Models must be validated for long-term reliability.

Given these challenges, testing must go beyond traditional software validation to ensure models perform reliably under real-world conditions.

2. Data Collection and Preprocessing for Embedded ML

A. Representative Dataset Collection

- Collect data from the actual hardware sensors (not just simulations).

- Include edge cases (e.g., sensor failures, extreme temperatures).

- Ensure diversity in training data to avoid bias.

B. Data Augmentation for Robustness

- Simulate noise, distortions, and missing data to improve generalization.

- Use techniques like time warping for time-series data.
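The augmentations listed above can be sketched in a few lines of Python. This is a minimal illustration, not a library API: the function names are hypothetical, missing samples are represented as NaN, and `time_warp` applies a uniform speed change, a simplified form of time warping (many published variants use smooth, non-uniform warping curves).

```python
import random


def add_gaussian_noise(signal, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation sigma to each sample."""
    return [x + rng.gauss(0.0, sigma) for x in signal]


def drop_samples(signal, drop_prob, rng):
    """Mark each sample as missing (NaN) with probability drop_prob."""
    return [float("nan") if rng.random() < drop_prob else x for x in signal]


def time_warp(signal, factor):
    """Uniformly stretch (factor > 1) or compress (factor < 1) a signal
    using linear interpolation. Requires at least two samples."""
    n_out = max(2, round(len(signal) * factor))
    last = len(signal) - 1
    warped = []
    for i in range(n_out):
        pos = i * last / (n_out - 1)  # position of output sample i on the input axis
        lo = int(pos)
        hi = min(lo + 1, last)
        frac = pos - lo
        warped.append(signal[lo] * (1 - frac) + signal[hi] * frac)
    return warped


rng = random.Random(42)  # fixed seed so augmented datasets are reproducible
clean = [0.0, 0.5, 1.0, 0.5, 0.0, -0.5, -1.0, -0.5]
augmented = drop_samples(add_gaussian_noise(time_warp(clean, 1.25), 0.05, rng), 0.1, rng)
```

Warping is applied before noise and dropout here because interpolating across a NaN would spread the missing value into neighboring samples.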

C. Quantization and Normalization

- Preprocess data to match the embedded system’s numerical precision (e.g., 8-bit fixed-point).

- Normalize inputs to the same range used during training.
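A minimal sketch of matching on-device preprocessing to training, assuming standardization with a stored training mean and standard deviation, followed by the affine int8 scheme (q = round(x / scale) + zero_point) used by integer-quantized TensorFlow Lite models. The numeric constants are hypothetical; on a real project they would come from the training pipeline and the converted model's input tensor parameters.

```python
# Hypothetical statistics computed on the training set (e.g. from a 10-bit ADC).
TRAIN_MEAN = 512.0
TRAIN_STD = 128.0

# Hypothetical int8 input quantization parameters taken from the converted model.
INPUT_SCALE = 1 / 32
INPUT_ZERO_POINT = 0


def normalize(samples, mean, std):
    """Apply exactly the standardization used during training."""
    return [(v - mean) / std for v in samples]


def quantize_int8(values, scale, zero_point):
    """Affine quantization q = round(x / scale) + zero_point, clamped to int8.

    Note: Python's round() rounds halves to even. If the device code rounds
    halves away from zero, tie cases can differ by one step.
    """
    return [max(-128, min(127, round(v / scale) + zero_point)) for v in values]


def dequantize_int8(q_values, scale, zero_point):
    """Inverse mapping, useful for checking quantization error on the host."""
    return [(q - zero_point) * scale for q in q_values]


raw = [512.0, 640.0, 384.0, 1023.0]
q = quantize_int8(normalize(raw, TRAIN_MEAN, TRAIN_STD), INPUT_SCALE, INPUT_ZERO_POINT)
print(q)  # [0, 32, -32, 127]; 1023 saturates at the int8 maximum
```

Running the same raw samples through both the host pipeline and the device firmware and comparing the int8 values is a cheap way to catch preprocessing mismatches.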

3. Model Optimization for Embedded Deployment

A. Model Pruning and Compression

- Remove redundant neurons/weights to reduce size.

- Use techniques like weight clustering and knowledge distillation.
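Magnitude pruning, one common way to remove redundant weights, can be sketched as follows. This is a hypothetical, framework-free illustration on a flat list of weights; in practice pruning is often applied gradually during fine-tuning with framework tooling. Zeroed weights only save flash or compute if the storage format or kernels exploit sparsity, which is one reason structured pruning (removing whole neurons or channels) is often preferred on microcontrollers.

```python
def magnitude_prune(weights, sparsity):
    """Unstructured magnitude pruning: zero the `sparsity` fraction of weights
    with the smallest absolute values (rounded down to a whole count)."""
    n_prune = int(len(weights) * sparsity)
    # Indices sorted from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


def sparsity_of(weights):
    """Fraction of weights that are exactly zero."""
    return sum(w == 0.0 for w in weights) / len(weights)


layer = [0.1, -0.9, 0.05, 0.4]
pruned = magnitude_prune(layer, 0.5)
print(pruned)               # [0.0, -0.9, 0.0, 0.4]
print(sparsity_of(pruned))  # 0.5
```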

B. Quantization

- Convert floating-point models to fixed-point (INT8) for efficiency.

- Measure accuracy loss by evaluating the quantized and floating-point models on the same held-out test set.
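One way to make the accuracy-loss check concrete is an automated gate that compares float and quantized accuracy on the same held-out set. In this sketch a toy linear classifier stands in for the model, weights use symmetric per-tensor int8 quantization, and the weights, test set, and 1% threshold are all hypothetical. With a real model you would instead run both the float model and the converted model (for example through the TensorFlow Lite interpreter) on the same data.

```python
def quantize_weights_symmetric(weights):
    """Symmetric per-tensor int8 quantization: w ~ q * scale with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def predict(weights, x):
    """Toy binary classifier standing in for the real model."""
    return 1 if sum(w * v for w, v in zip(weights, x)) > 0 else 0


def accuracy(weights, dataset):
    return sum(predict(weights, x) == y for x, y in dataset) / len(dataset)


def accuracy_drop(weights, dataset):
    """Float accuracy minus accuracy with quantized-then-dequantized weights."""
    q, scale = quantize_weights_symmetric(weights)
    return accuracy(weights, dataset) - accuracy([v * scale for v in q], dataset)


# Hypothetical weights, held-out test set, and acceptance threshold.
WEIGHTS = [0.8, -0.5, 0.3]
TEST_SET = [([1, 0, 0], 1), ([0, 1, 0], 0), ([0, 0, 1], 1), ([1, 1, 1], 1)]
MAX_ALLOWED_DROP = 0.01

drop = accuracy_drop(WEIGHTS, TEST_SET)
assert drop <= MAX_ALLOWED_DROP, f"quantization cost {drop:.3f} accuracy"
```

Running this gate in CI after every model conversion turns "test for accuracy loss" into a pass/fail check.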

C. Hardware-Aware Neural Architecture Search (NAS)

- Automatically design models optimized for the target hardware.

- Balance accuracy, latency, and memory usage.
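A full hardware-aware NAS involves a search space and search strategy that are beyond a short example. The sketch below shows only the constraint-handling step that makes a search hardware-aware: it discards candidates that exceed the latency, flash, or RAM budgets and keeps the most accurate remaining one. The `Candidate` fields, metric values, and budgets are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    name: str
    accuracy: float    # validation accuracy in [0, 1]
    latency_ms: float  # measured or predicted on the target device
    flash_kb: float    # weights plus runtime
    ram_kb: float      # peak activation memory


def select_candidate(candidates, max_latency_ms, max_flash_kb, max_ram_kb) -> Optional[Candidate]:
    """Keep candidates that meet every hardware budget, then return the most accurate."""
    feasible = [
        c for c in candidates
        if c.latency_ms <= max_latency_ms
        and c.flash_kb <= max_flash_kb
        and c.ram_kb <= max_ram_kb
    ]
    return max(feasible, key=lambda c: c.accuracy, default=None)


# Hypothetical candidates produced by a search.
CANDIDATES = [
    Candidate("wide", accuracy=0.95, latency_ms=40.0, flash_kb=300.0, ram_kb=90.0),
    Candidate("medium", accuracy=0.92, latency_ms=18.0, flash_kb=110.0, ram_kb=40.0),
    Candidate("tiny", accuracy=0.88, latency_ms=6.0, flash_kb=45.0, ram_kb=20.0),
]

best = select_candidate(CANDIDATES, max_latency_ms=20.0, max_flash_kb=128.0, max_ram_kb=64.0)
print(best.name if best else "no candidate fits")  # medium
```

Treating the budgets as hard filters rather than folding them into a single weighted score keeps infeasible models from winning on accuracy alone.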

D. Choosing the Right Framework

- TensorFlow Lite for Microcontrollers (TFLM)

- ONNX Runtime (better suited to embedded Linux-class devices than to microcontrollers)

- CMSIS-NN (for ARM Cortex-M)

4. Testing Methodologies for Embedded ML Models

A. Unit Testing for ML Models

- Test individual layers for numerical correctness.

- Verify tensor shapes and memory usage.
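A layer-level unit test typically compares an optimized kernel against a floating-point reference within a tolerance and checks the output shape. In this minimal sketch both sides are plain Python: `dense_int8` stands in for the kernel under test (symmetric quantization, zero points of 0, integer accumulation), and the inputs, scales, and 0.02 tolerance are hypothetical. In a real project the reference output would typically come from the float model in the training framework.

```python
import math


def dense_float(x, w, b):
    """Float reference: y[j] = sum_i x[i] * w[i][j] + b[j]."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) + b[j] for j in range(len(b))]


def dense_int8(xq, x_scale, wq, w_scale, b):
    """Integer-accumulating dense layer with a float rescale at the end.

    Assumes symmetric quantization (zero point 0) for inputs and weights.
    """
    acc = [sum(xq[i] * wq[i][j] for i in range(len(xq))) for j in range(len(b))]
    return [a * x_scale * w_scale + b[j] for j, a in enumerate(acc)]


def test_dense_matches_reference(tolerance=0.02):
    x = [0.5, -1.0, 0.25]
    w = [[0.2, -0.4], [0.1, 0.3], [-0.5, 0.6]]
    b = [0.05, -0.05]
    x_scale, w_scale = 1 / 64, 1 / 128
    xq = [round(v / x_scale) for v in x]
    wq = [[round(v / w_scale) for v in row] for row in w]
    assert all(-128 <= v <= 127 for row in wq for v in row), "weights overflow int8"

    ref = dense_float(x, w, b)
    out = dense_int8(xq, x_scale, wq, w_scale, b)

    assert len(out) == len(b), "output shape mismatch"
    for r, o in zip(ref, out):
        assert math.isclose(r, o, abs_tol=tolerance), (r, o)


test_dense_matches_reference()
```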

B. Integration Testing with Hardware

- Run inference on the actual device (not just emulators).

- Check for memory leaks and stack overflows.
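Stack overflows are often caught with stack painting: the stack region is filled with a known byte pattern at boot, inference runs on worst-case inputs, and the region is then read back (for example with a debugger) to find how much of the pattern was overwritten. The host-side analysis might look like this sketch, which assumes a descending stack, a dump ordered from low to high address, and a hypothetical 0xA5 pattern. The estimate can be slightly low if a used byte happens to equal the pattern. RTOSes such as FreeRTOS provide a comparable check through `uxTaskGetStackHighWaterMark`.

```python
PAINT_BYTE = 0xA5  # hypothetical pattern written over the whole stack at boot


def stack_high_water_mark(stack_dump, paint_byte=PAINT_BYTE):
    """Bytes of stack that were ever used.

    Assumes a descending stack painted with paint_byte before the task ran,
    and a dump ordered from the lowest to the highest address, so untouched
    bytes remain at the start of the dump.
    """
    unused = 0
    for value in stack_dump:
        if value != paint_byte:
            break
        unused += 1
    return len(stack_dump) - unused


def check_stack_margin(stack_dump, min_free_bytes):
    """Fail if less than min_free_bytes of the stack was never touched."""
    free = len(stack_dump) - stack_high_water_mark(stack_dump)
    return free >= min_free_bytes


# Example: a 1 KB stack where inference touched the top 700 bytes.
dump = bytes([PAINT_BYTE] * 324 + [0x00] * 700)
print(stack_high_water_mark(dump))    # 700
print(check_stack_margin(dump, 256))  # True
```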

C. Performance Benchmarking

- Measure latency, throughput, and power consumption.

- Compare worst-case (not just average) latency against real-time deadlines.
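Latency is best reported as a distribution rather than a single average, and real-time deadlines should be checked against the worst observed case. The sketch below uses `time.perf_counter_ns` on a host; on a microcontroller the same logic would use a hardware timer or cycle counter (for example the DWT cycle counter available on many Cortex-M cores). The lambda passed as `run_inference` is a placeholder for the actual model call, and the 10 ms deadline is hypothetical. The maximum observed latency is not a guaranteed worst-case execution time.

```python
import math
import time


def measure_latencies_ms(run_inference, n_runs, warmup=5):
    """Time n_runs calls to run_inference after a few untimed warm-up calls."""
    for _ in range(warmup):
        run_inference()
    samples = []
    for _ in range(n_runs):
        start = time.perf_counter_ns()
        run_inference()
        samples.append((time.perf_counter_ns() - start) / 1e6)
    return samples


def summarize(samples_ms):
    """Mean, nearest-rank 99th percentile, and maximum observed latency."""
    ordered = sorted(samples_ms)
    rank = math.ceil(0.99 * len(ordered))
    return {
        "mean": sum(ordered) / len(ordered),
        "p99": ordered[rank - 1],
        "max": ordered[-1],
    }


def meets_deadline(samples_ms, deadline_ms):
    """Hard real-time check: every observed run must finish before the deadline."""
    return max(samples_ms) <= deadline_ms


samples = measure_latencies_ms(lambda: sum(range(10_000)), n_runs=200)
print(summarize(samples), meets_deadline(samples, deadline_ms=10.0))
```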

D. Robustness Testing

- Inject noise and adversarial perturbations to test stability.

- Validate under varying conditions (temperature, voltage fluctuations).
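A simple software-side robustness check is to sweep the noise level and watch how accuracy degrades. This sketch uses a hypothetical threshold classifier and dataset with additive Gaussian noise. It does not cover adversarial attacks, which usually require gradient-based methods such as FGSM, or physical factors like temperature and supply voltage, which need testing on real hardware.

```python
import random


def accuracy_under_noise(predict, dataset, sigma, trials=20, seed=0):
    """Accuracy averaged over `trials` noisy copies of each input, where each
    feature gets zero-mean Gaussian noise with standard deviation sigma."""
    rng = random.Random(seed)
    correct = total = 0
    for _ in range(trials):
        for x, y in dataset:
            noisy = [v + rng.gauss(0.0, sigma) for v in x]
            correct += predict(noisy) == y
            total += 1
    return correct / total


def predict(x):
    """Hypothetical classifier: class 1 if the features sum to a positive value."""
    return 1 if sum(x) > 0 else 0


# Hypothetical labelled samples; the last two sit close to the decision boundary.
DATA = [([1.0, 1.0], 1), ([-1.0, -1.0], 0), ([0.2, 0.1], 1), ([-0.1, -0.3], 0)]

for sigma in (0.0, 0.1, 0.5, 1.0):
    print(f"sigma={sigma}: accuracy={accuracy_under_noise(predict, DATA, sigma):.2f}")
```

A fixed seed makes the sweep reproducible, so a regression in robustness between model versions shows up as a changed number rather than random variation.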

E. Edge Case Testing

- Test with out-of-distribution (OOD) data to verify fail-safe behavior.

- Simulate sensor failures (e.g., NaN values, dropped samples).
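Edge-case handling becomes testable when a validation guard sits in front of the model. The sketch below is one possible policy, not a standard: windows with dropped samples (wrong length) or too many NaN/inf values are rejected in favor of a hypothetical fail-safe output, and isolated invalid samples are forward-filled. The 10% threshold and the forward-fill rule are assumptions to adapt per application.

```python
import math

FAIL_SAFE_OUTPUT = "SAFE_STATE"  # hypothetical fail-safe action


def validate_window(window, expected_len, max_missing_frac=0.1):
    """Return a cleaned copy of the window, or None if it should be rejected.

    Rejects windows with dropped samples (wrong length), too many NaN/inf
    values, or a leading invalid sample. Isolated invalid samples are
    replaced with the previous valid value (forward fill).
    """
    if len(window) != expected_len:
        return None
    invalid = [not math.isfinite(v) for v in window]
    if sum(invalid) > max_missing_frac * expected_len:
        return None
    cleaned, last_valid = [], None
    for value, bad in zip(window, invalid):
        if bad:
            if last_valid is None:
                return None  # nothing to fill from
            cleaned.append(last_valid)
        else:
            cleaned.append(value)
            last_valid = value
    return cleaned


def guarded_inference(window, expected_len, predict):
    """Run the model only on validated input; otherwise return the fail-safe output."""
    cleaned = validate_window(window, expected_len)
    return FAIL_SAFE_OUTPUT if cleaned is None else predict(cleaned)


nan = float("nan")
print(guarded_inference([1.0, nan, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0], 10, max))  # 10.0
print(guarded_inference([1.0, 2.0, 3.0], 10, max))  # SAFE_STATE
```

Here the built-in `max` stands in for the model so the guard can be exercised without one; edge-case tests then assert on the guard's output for each simulated failure.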

5. Validation Strategies for Real-World Performance

A. Cross-Validation on Embedded Hardware

- Use k-fold validation on real sensor data.

- Test across multiple hardware units to account for manufacturing variances.
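When data comes from several hardware units, plain k-fold splitting can place samples from the same board in both training and test folds, hiding unit-to-unit variation. A minimal sketch of grouping folds by device is shown below (scikit-learn's `GroupKFold` implements the same idea); the device IDs are hypothetical.

```python
def device_grouped_folds(device_ids, k):
    """Yield (train_indices, test_indices) for k folds, keeping every sample
    from a given device unit in the same fold."""
    units = sorted(set(device_ids))
    if k < 2 or k > len(units):
        raise ValueError("need 2 <= k <= number of distinct device units")
    fold_of_unit = {unit: i % k for i, unit in enumerate(units)}  # round-robin
    for fold in range(k):
        test = [i for i, d in enumerate(device_ids) if fold_of_unit[d] == fold]
        train = [i for i, d in enumerate(device_ids) if fold_of_unit[d] != fold]
        yield train, test


# Hypothetical board serial number for each recorded sample.
ids = ["A", "A", "B", "C", "C", "D"]
for train, test in device_grouped_folds(ids, k=2):
    print("train", train, "test", test)
```

Each fold's score then reflects performance on boards the model has never seen, which is closer to what happens in production.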

B. Field Testing and A/B Testing

- Deploy multiple model versions in real environments.

- Monitor performance metrics (accuracy, latency, power).
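Comparing two model versions in the field raises the question of whether an accuracy difference is real or just sampling noise. One standard option, sketched here with hypothetical counts, is a two-proportion z-test. It assumes the field samples are independent and that ground-truth labels are available for them, which is often the harder part in deployed systems.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(correct_a, n_a, correct_b, n_b):
    """Two-sided z-test for a difference in accuracy between model A and model B.

    Returns (z, p_value).
    """
    p_a, p_b = correct_a / n_a, correct_b / n_b
    pooled = (correct_a + correct_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # both versions all-correct or all-wrong: no evidence of a difference
    z = (p_a - p_b) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical field results for two firmware builds.
z, p = two_proportion_z_test(correct_a=950, n_a=1000, correct_b=900, n_b=1000)
print(f"z={z:.2f}, p={p:.4f}")
```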

C. Continuous Monitoring and Model Updates

- Implement online learning (if feasible).

- Use firmware-over-the-air (FOTA) updates for model improvements.
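Continuous monitoring needs a concrete trigger for investigating drift. This sketch flags drift when the rolling mean of a monitored value (for example a sensor feature or the model's top-class confidence) moves more than a chosen number of standard errors from a baseline measured during validation. It is a simple z-score on a window mean, assuming roughly independent observations, rather than a dedicated drift-detection algorithm; the class name, window size, and threshold are hypothetical.

```python
import math
from collections import deque


class DriftMonitor:
    """Flags drift when the rolling mean leaves a baseline band."""

    def __init__(self, baseline_mean, baseline_std, window=100, threshold=4.0):
        self.mean = baseline_mean
        self.std = baseline_std
        self.values = deque(maxlen=window)  # oldest values drop out automatically
        self.threshold = threshold

    def update(self, value):
        """Add one observation; return True if drift is detected."""
        self.values.append(value)
        if len(self.values) < self.values.maxlen:
            return False  # wait for a full window
        rolling = sum(self.values) / len(self.values)
        stderr = self.std / math.sqrt(len(self.values))
        return abs(rolling - self.mean) > self.threshold * stderr


monitor = DriftMonitor(baseline_mean=0.0, baseline_std=1.0, window=4, threshold=3.0)
for v in [0.1, -0.1, 0.2, -0.2, 2.0, 2.0, 2.0, 2.0]:
    print(v, monitor.update(v))
```

On a device, a positive result might be reported through telemetry and used to decide whether a retrained model should be shipped via FOTA.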

6. Tools and Frameworks for Testing Embedded ML

| Tool | Purpose |
|------|---------|
| TensorFlow Lite Micro | Deploy and test ML on microcontrollers |
| STM32Cube.AI | Convert and optimize neural network models for STM32 MCUs |
| Zephyr OS | RTOS with ML inference support |
| QEMU Emulation | Test models without physical hardware |
| Valgrind / Memcheck | Detect memory leaks in inference code compiled for a host machine (Valgrind does not run on bare-metal MCUs) |

7. Case Studies and Lessons Learned

Case Study 1: Keyword Spotting on a Cortex-M4

- Challenge: Fit a speech recognition model in 128 KB of flash.

- Solution: Used a pruned, quantized model running on TensorFlow Lite for Microcontrollers.

- Testing: Validated against real-time audio latency constraints.

Case Study 2: Predictive Maintenance on an Industrial PLC

- Challenge: Handle noisy vibration sensor data.

- Solution: Used data augmentation and robust PCA preprocessing.

- Testing: Deployed in a factory for 6 months to monitor drift.

Lessons Learned:

- Always test on real hardware early.

- Power consumption matters as much as accuracy.

- Model explainability helps debug edge cases.

Conclusion

Testing and validating ML models for embedded systems requires a specialized approach that accounts for resource constraints, real-time requirements, and environmental factors. By following structured methodologies—from data collection to hardware-in-the-loop testing—engineers can ensure their ML models are both accurate and reliable in production.

Key takeaways:

✔ Optimize models for memory and latency early.

✔ Test with real sensor data and hardware.

✔ Validate robustness against noise and failures.

✔ Continuously monitor performance post-deployment.

As ML becomes more prevalent in embedded systems, rigorous testing will be the difference between a successful deployment and a costly failure. By applying these best practices, embedded engineers can confidently integrate machine learning into their next-generation devices.