Demand for artificial intelligence (AI) at the edge is growing rapidly, driven by applications such as autonomous vehicles, industrial automation, and IoT devices. Because edge devices need fast processing, low latency, and energy efficiency, Field-Programmable Gate Arrays (FPGAs) have emerged as a compelling choice for accelerating AI workloads. With reconfigurable hardware and fine-grained parallelism, FPGAs can be tailored to balance speed, power consumption, and flexibility.

This article explores how FPGAs enable AI at the edge, delves into their benefits and challenges, and provides insights into designing FPGA-based AI solutions for embedded systems.

The Rise of AI at the Edge

Edge AI refers to running AI inference directly on edge devices rather than relying on cloud computing. This shift is fueled by the need for real-time decision-making and data privacy. Applications of edge AI include:

- Autonomous Systems: Real-time image recognition for self-driving cars.

- Smart Cities: Traffic management, facial recognition, and security systems.

- Healthcare: Portable diagnostic devices performing AI analysis on-site.

- Industrial IoT: Predictive maintenance and anomaly detection in factories.

Challenges of Edge AI

While edge AI offers numerous benefits, it also presents significant challenges:

- Power Constraints: Edge devices often operate on limited power sources.

- Latency: Real-time applications cannot tolerate high communication delays with the cloud.

- Compute Resources: Edge devices must balance performance with compact form factors.

This is where FPGAs shine as an enabler for edge AI.

Why FPGAs for AI at the Edge?

FPGAs are semiconductor devices whose logic can be reprogrammed after manufacturing to implement specific functions. Unlike CPUs and GPUs, which have fixed architectures, an FPGA's datapath can be tailored to a specific workload. This makes FPGAs well suited to AI inference at the edge.

Key Advantages of FPGAs for Edge AI

- Hardware Reconfigurability:

  - FPGAs allow engineers to implement custom AI accelerators, optimizing performance for specific models and datasets.

  - Reprogramming capabilities enable updates to AI algorithms without hardware changes.

- Low Latency:

  - Unlike cloud-based solutions, FPGAs process data locally, which removes the network round trip from AI inference.

  - Their parallel architecture lets many operations of a model execute at the same time.

- Energy Efficiency:

  - FPGAs consume less power than GPUs for many AI inference tasks, which suits battery-powered edge devices.

  - Power-saving techniques such as clock gating let a design reduce its power draw when parts of the logic are idle.

- High Throughput:

  - FPGAs can perform operations like matrix multiplication and convolution in parallel, enhancing throughput for tasks like image recognition.

- Scalability:

  - FPGAs are available in a range of configurations, from low-cost chips for simple AI tasks to high-end devices for advanced deep learning models.

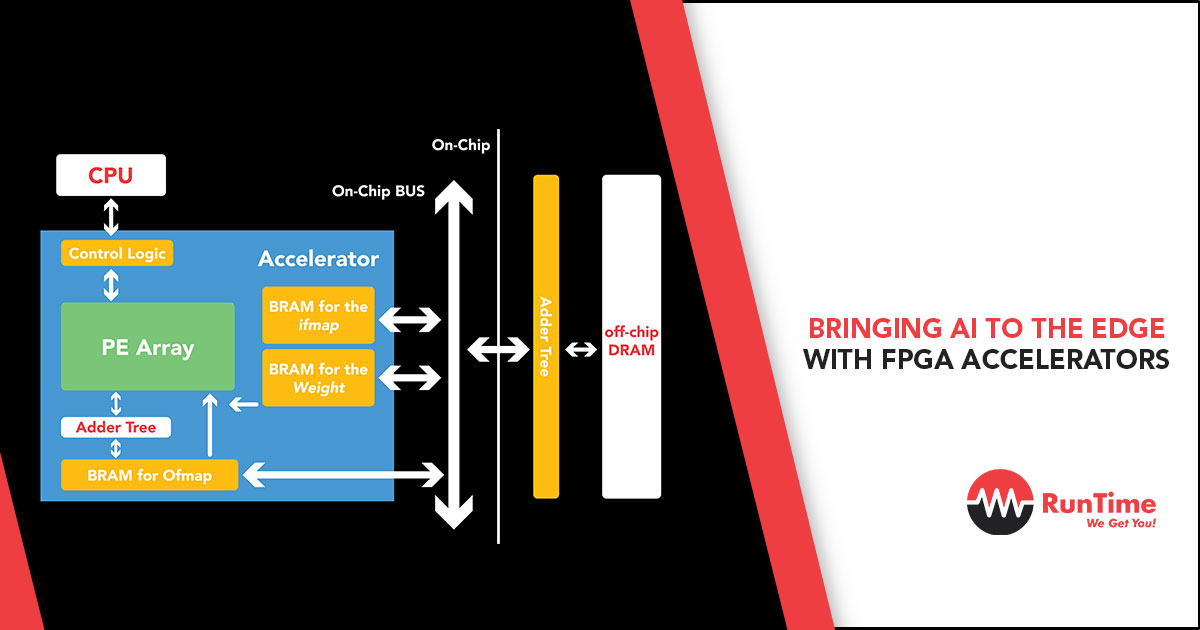

FPGA AI Architecture

To understand how FPGAs accelerate AI workloads, let’s break down a typical architecture for deploying AI on FPGAs.

1. AI Model Representation

AI models, particularly deep neural networks (DNNs), consist of layers such as convolutions, pooling, and fully connected layers. FPGAs accelerate these operations using custom logic.
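To make the layer operations concrete, the sketch below writes a convolution as explicit multiply-accumulate (MAC) operations, which is the unit of work a custom accelerator would replicate in hardware. It is an illustrative software model only: the function name `conv2d_valid` and the sample data are assumptions, and the code uses stride 1 with no padding.

```python
def conv2d_valid(image, kernel):
    """Direct 2D convolution as used in DNNs (no kernel flip), stride 1, no padding.

    Returns the output feature map and the number of MAC operations performed.
    """
    in_h, in_w = len(image), len(image[0])
    k_h, k_w = len(kernel), len(kernel[0])
    out_h, out_w = in_h - k_h + 1, in_w - k_w + 1
    output = [[0] * out_w for _ in range(out_h)]
    macs = 0
    for r in range(out_h):
        for c in range(out_w):
            acc = 0
            for i in range(k_h):
                for j in range(k_w):
                    acc += image[r + i][c + j] * kernel[i][j]  # one MAC
                    macs += 1
            output[r][c] = acc
    return output, macs


# Hypothetical 4x4 input and 2x2 kernel, for illustration only.
image = [[1, 2, 3, 0],
         [4, 5, 6, 1],
         [7, 8, 9, 2],
         [0, 1, 2, 3]]
kernel = [[1, 0],
          [0, -1]]
feature_map, mac_count = conv2d_valid(image, kernel)
print(feature_map)  # [[-4, -4, 2], [-4, -4, 4], [6, 6, 6]]
print(mac_count)    # 36
```

Every output element is independent of the others, which is why the inner loops can be unrolled into parallel hardware.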

2. Dataflow and Parallelism

- Parallel Processing: FPGAs leverage multiple processing elements to handle operations like matrix multiplications in parallel.

- Pipelining: Tasks are divided into stages so that a new input can enter the pipeline before the previous one has finished. Once the pipeline is full, throughput is set by the slowest stage.
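A simple cycle-count model can illustrate both ideas. The sketch below is a simplified estimate, not a description of any particular device: it assumes every input takes the same number of cycles in each stage, and the function names and stage latencies are hypothetical.

```python
import math


def sequential_cycles(stage_cycles, n_items):
    """Cycles when each item passes through all stages before the next one starts."""
    return n_items * sum(stage_cycles)


def pipelined_cycles(stage_cycles, n_items):
    """Cycles when stages overlap: the first item pays the full latency,
    and each later item completes one initiation interval after the previous one."""
    if n_items == 0:
        return 0
    initiation_interval = max(stage_cycles)  # the slowest stage limits throughput
    return sum(stage_cycles) + (n_items - 1) * initiation_interval


def parallel_mac_cycles(n_macs, n_processing_elements):
    """Cycles to finish n_macs when each processing element does one MAC per cycle."""
    return math.ceil(n_macs / n_processing_elements)


stages = [2, 4, 1]  # hypothetical per-stage latencies in cycles
print(sequential_cycles(stages, 100))  # 700
print(pipelined_cycles(stages, 100))   # 403
print(parallel_mac_cycles(36, 8))      # 5
```

In this model, shortening the 4-cycle stage helps far more than shortening the others, which is why balancing stage latencies is a common design goal.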

3. Fixed-Point Arithmetic

FPGAs often use fixed-point representation instead of floating-point to reduce resource usage and improve performance for AI inference.
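As a rough illustration of fixed-point arithmetic, the sketch below stores real numbers as signed integers with a fixed number of fractional bits, here 16-bit values with 8 fractional bits. The helper names and the chosen format are assumptions for this example; a real design would pick the bit widths from the value ranges of its own model.

```python
def to_fixed(x, frac_bits, total_bits=16):
    """Convert a float to a signed fixed-point integer, saturating on overflow."""
    lowest = -(1 << (total_bits - 1))
    highest = (1 << (total_bits - 1)) - 1
    scaled = round(x * (1 << frac_bits))  # Python rounds ties to the nearest even integer
    return max(lowest, min(highest, scaled))


def to_float(q, frac_bits):
    """Convert a fixed-point integer back to a float."""
    return q / (1 << frac_bits)


def fixed_mul(a, b, frac_bits):
    """Multiply two fixed-point values that share the same format.

    The raw product carries 2 * frac_bits fractional bits, so it is shifted
    right to return to the original format (this rounds toward negative infinity).
    """
    return (a * b) >> frac_bits


a = to_fixed(1.5, 8)     # 384
b = to_fixed(-0.25, 8)   # -64
product = fixed_mul(a, b, 8)
print(to_float(product, 8))  # -0.375
print(to_fixed(1000.0, 8))   # 32767 (saturated)
```

The multiplication uses only an integer multiply and a shift, which is much cheaper in FPGA logic than a floating-point unit.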

4. On-Chip Memory

FPGAs use on-chip BRAM (Block RAM) to store weights, biases, and intermediate results, reducing latency compared to accessing external memory.
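A quick capacity estimate can show whether a model's parameters are likely to fit on chip. The sketch below is a back-of-the-envelope calculation under stated assumptions: the 36 Kb block size, the block budget of 300, and the parameter count are hypothetical inputs, and the estimate ignores the width and depth configurations that real block RAMs impose.

```python
import math


def bram_blocks_needed(n_values, bits_per_value, block_kbits=36):
    """Estimate how many block RAMs are needed to hold n_values.

    block_kbits is an assumed block size; check the datasheet of the target device.
    """
    total_bits = n_values * bits_per_value
    return math.ceil(total_bits / (block_kbits * 1024))


def fits_on_chip(n_values, bits_per_value, available_blocks, block_kbits=36):
    """True when the estimated block count is within the available budget."""
    return bram_blocks_needed(n_values, bits_per_value, block_kbits) <= available_blocks


n_weights = 1_000_000  # hypothetical model size
print(bram_blocks_needed(n_weights, 8))    # 218
print(bram_blocks_needed(n_weights, 32))   # 869
print(fits_on_chip(n_weights, 8, available_blocks=300))   # True
print(fits_on_chip(n_weights, 32, available_blocks=300))  # False
```

In this example the same model fits only after its weights are reduced from 32 bits to 8 bits, which ties on-chip storage directly to the choice of numeric precision.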

Common FPGA Frameworks for AI

Deploying AI models on FPGAs can be challenging without the right tools. Fortunately, several frameworks simplify this process:

1. AMD (Xilinx) Vitis AI

- Targets AMD (formerly Xilinx) FPGAs and adaptive SoCs.

- Provides a model zoo of pre-optimized AI models.

- Includes tools for quantization and model compilation.

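2. Intel OpenVINO

- Earlier releases included a plugin for Intel FPGAs. Recent releases no longer ship it, and Intel's FPGA AI Suite now provides the FPGA flow, using OpenVINO as its front end.

- Streamlines deployment of AI models trained in popular frameworks such as TensorFlow or PyTorch.

- Supports heterogeneous inference across the devices its plugins cover, such as CPUs and GPUs.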

3. OpenCL for FPGAs

- Allows engineers to describe AI accelerators using high-level programming languages.

- Enables portability across FPGA platforms.

4. Custom HDL Design

- Engineers can use Verilog or VHDL to design custom AI accelerators.

- Offers maximum flexibility but requires deep expertise in FPGA design.

Steps to Implement AI on FPGAs

Deploying AI models on FPGAs involves several key steps:

1. Select the FPGA Platform

Choose an FPGA that matches the application’s compute, memory, and power requirements. Popular FPGA families include:

- Xilinx Zynq UltraScale+: Ideal for high-performance AI applications.

- Intel Stratix 10: Offers advanced AI capabilities for edge computing.

- Lattice ECP5: Suitable for low-power IoT devices.

2. Optimize the AI Model

Optimize AI models for FPGA deployment to balance accuracy and performance:

- Quantization: Convert weights and activations to lower precision (e.g., INT8) to reduce resource usage.

- Pruning: Remove insignificant connections in the model to reduce its size.

- Compression: Use techniques like weight sharing and sparsity to minimize memory usage.
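The first two techniques can be sketched in a few lines. The code below shows magnitude pruning followed by symmetric per-tensor INT8 quantization on a flat list of weights. It is a minimal illustration, not the procedure used by any particular toolchain: the function names and sample weights are hypothetical, and production tools typically calibrate on real data and fine-tune the model afterwards.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    n_drop = int(len(weights) * sparsity)
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    dropped = set(by_magnitude[:n_drop])
    return [0.0 if i in dropped else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric quantization: map [-max_abs, max_abs] onto the integers [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from INT8 values."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.01, 0.9, -0.02, 0.3]  # hypothetical layer weights
pruned = prune_by_magnitude(weights, sparsity=0.5)
quantized, scale = quantize_int8(pruned)
print(pruned)     # [0.5, -1.27, 0.0, 0.9, 0.0, 0.0]
print(quantized)  # [50, -127, 0, 90, 0, 0]
```

Each surviving weight is now stored in 8 bits plus one shared scale factor, and the rounding error per weight is at most half of `scale`.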

3. Map the Model to FPGA

Use FPGA frameworks or HDL to map the model to FPGA hardware:

- Leverage pre-built libraries for standard layers (e.g., convolution, ReLU, pooling).

- Customize accelerators for unique AI operations.

4. Verify and Test

Testing should confirm that the FPGA implementation preserves the accuracy of the original model and meets its performance targets:

- Simulation: Use FPGA simulators to validate the design before deployment.

- In-Hardware Testing: Test the model in the target environment to ensure real-world performance meets expectations.
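One common way to check accuracy is to compare outputs from the FPGA design (or its simulation) against a floating-point reference on the same inputs. The sketch below computes two simple metrics for that comparison. The function names and the sample scores are hypothetical; in practice the candidate outputs would be read back from the simulator or the board.

```python
def argmax(values):
    """Index of the largest value in a list."""
    return max(range(len(values)), key=lambda i: values[i])


def top1_agreement(reference, candidate):
    """Fraction of samples where both implementations predict the same class."""
    matches = sum(1 for ref, cand in zip(reference, candidate)
                  if argmax(ref) == argmax(cand))
    return matches / len(reference)


def max_abs_error(reference, candidate):
    """Largest element-wise difference between the two sets of outputs."""
    return max(abs(r - c)
               for ref, cand in zip(reference, candidate)
               for r, c in zip(ref, cand))


# Hypothetical class scores for three input samples.
reference_outputs = [[0.1, 0.7, 0.2], [0.6, 0.3, 0.1], [0.2, 0.3, 0.5]]
fpga_outputs = [[0.12, 0.69, 0.19], [0.58, 0.33, 0.09], [0.30, 0.36, 0.34]]

print(top1_agreement(reference_outputs, fpga_outputs))  # 2 of 3 samples agree
print(max_abs_error(reference_outputs, fpga_outputs))   # about 0.16
```

A project would typically set its own thresholds for these metrics and fail the build when a change to the quantization or the hardware design pushes the results outside them.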

5. Deploy and Update

Deploy the FPGA-based solution in the field and use reconfigurability to update AI models as needed:

- Remote updates can extend the product lifecycle without hardware changes.
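Before a device applies a remotely delivered configuration, it is prudent to check that the file arrived intact. The sketch below compares a SHA-256 digest of the received bytes with an expected value. It is a minimal illustration under stated assumptions: the function names and the sample payload are hypothetical, how the expected digest reaches the device is left open, and a digest alone detects corruption but does not prove who produced the file, so a real update path would normally add a cryptographic signature.

```python
import hashlib
import hmac


def bitstream_digest(data: bytes) -> str:
    """Return the SHA-256 digest of the update payload as a hex string."""
    return hashlib.sha256(data).hexdigest()


def is_update_intact(data: bytes, expected_hex: str) -> bool:
    """Compare digests in constant time; True only when they match exactly."""
    return hmac.compare_digest(bitstream_digest(data), expected_hex.lower())


# Hypothetical payload standing in for a real configuration file.
payload = b"hypothetical bitstream contents"
expected = bitstream_digest(payload)  # would normally be published with the update

print(is_update_intact(payload, expected))              # True
print(is_update_intact(payload + b"\x00", expected))    # False
```

Keeping the previous known-good configuration available as a fallback is a common complement to this check, so that a failed update does not leave the device unusable.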

Applications of FPGA-Accelerated Edge AI

1. Autonomous Vehicles

- Object detection, lane departure warning, and pedestrian tracking require real-time AI inference.

- FPGAs handle image processing tasks with low latency, which supports timely safety-critical decisions.

2. Smart Cameras

- Facial recognition, license plate detection, and anomaly detection are common edge AI applications.

- FPGAs enable on-device processing, reducing bandwidth usage and ensuring privacy.

3. Industrial IoT

- Predictive maintenance uses AI models to analyze sensor data and predict equipment failures.

- FPGAs process large volumes of data in real time, improving operational efficiency.

4. Medical Devices

- Portable ultrasound machines and diagnostic tools leverage FPGA-accelerated AI for real-time analysis.

- Low power consumption is critical for battery-operated devices.

Challenges in Using FPGAs for AI at the Edge

While FPGAs offer numerous advantages, engineers must navigate certain challenges:

1. Design Complexity

- FPGA design requires expertise in hardware description languages and optimization techniques.

- Tools like OpenCL and Vitis AI reduce this complexity but have learning curves of their own.

2. Resource Constraints

- FPGAs have limited on-chip memory, and they typically offer less raw compute than GPUs.

- Engineers must carefully manage resources to fit AI models within hardware constraints.

3. Cost

- High-end FPGAs can be expensive, although costs are declining with wider adoption.

- The total cost of ownership, including development tools and time, must be considered.

4. Toolchain Fragmentation

- FPGA development tools are often vendor-specific, limiting portability across platforms.

- Open standards like OpenCL aim to address this but aren’t universally adopted.

The Future of FPGA-Accelerated AI at the Edge

As AI and edge computing continue to grow, FPGAs are evolving to meet emerging demands. Future trends include:

1. AI-Specific FPGA Architectures

- FPGA vendors are introducing AI-dedicated features, such as the AI Tensor Blocks in Intel Stratix 10 NX devices and the AI Engines in AMD Versal devices, for efficient matrix operations.

2. Increased Automation

- Enhanced design tools will further abstract FPGA programming, making it accessible to software engineers.

3. Integration with Other Technologies

- Combining FPGAs with CPUs, GPUs, or custom ASICs in heterogeneous systems will maximize performance.

4. Focus on Sustainability

- FPGAs’ reconfigurability and low power consumption align with the industry’s emphasis on sustainable, energy-efficient solutions.

Conclusion

FPGAs are changing how AI is deployed at the edge by combining flexibility, low latency, and energy efficiency. As edge AI applications continue to expand, the ability to use FPGA accelerators will be an important skill for embedded engineers. While challenges remain, advancements in FPGA technology and development tools are lowering the barrier to using them.

For embedded engineers, mastering FPGA-based AI design is more than a competitive advantage: it is a step toward enabling the intelligent, connected systems of tomorrow.